A few months ago we wrote an extensive article on sensor crop factors and equivalence. In that post we covered several topics: the history of the cropped-sensor formats, brightness of the scene, perspective, depth of field, noise and diffraction. In today’s post I want to focus on (if you’ll excuse the pun) and expand on two of these topics:

- Perspective

- Depth of Field (DOF)

Nothing in this post has to do with the number of megapixels your camera has, as we will be looking at side-by-side comparisons scaled to the same display size – the same way you would be looking at your photos on your tablet, computer monitor or in your photo albums.

1) Big vs Small sensor cameras – can they take identical photos?

At the get-go, let’s just establish that you really can take identical-looking photos with two wildly different cameras if you choose your settings appropriately. If you need convincing, just look at the example below. First, we see a photo I took with my iPhone 6’s back-facing camera. Below that, you see the photo I took a few seconds later with my Nikon D600 FX DSLR with the Nikkor 24-70mm f/2.8G lens, set to f/16. Notice how similar they are in terms of perspective, focus and background blur. At the same magnification they look pretty much identical. This is no coincidence!

The iPhone has a *tiny* sensor and a *tiny* lens compared to the Nikon, yet we are supposed to believe that size matters. How can we resolve this paradox?

By the way, if you’re observant you have already noticed that the Nikon DSLR image was cropped (slightly) to achieve the same 4:3 aspect ratio as the iPhone. You’ll also see that the ISO values and shutter speeds for these two photographs were very different. These are interesting and important side notes, but for now they do nothing to resolve our paradox.

There is a simple formula that you can use to compute depth of field equivalence – even between a Nikon FX DSLR and an iPhone, as you just saw.

2) How to compare DoF and field of view for cameras with different sensor sizes (crop factors)

How can two cameras that differ so much in physical size and sensor size produce images that, for all practical purposes, are nearly indistinguishable?

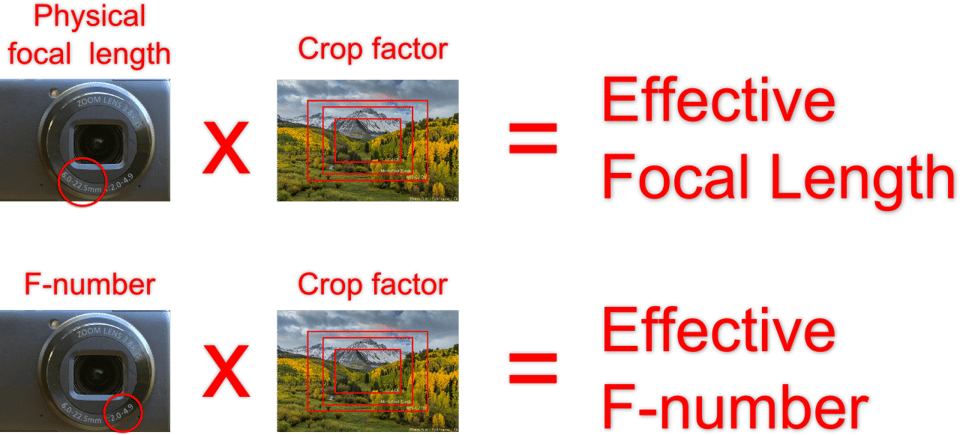

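I’ll try to keep it simple, and it really is, but you will need to know what a camera’s crop factor is. Then, a simple multiplication does the trick:

effective focal length = crop factor × physical focal length

effective f-number = crop factor × physical f-number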

The effective focal length (which should in fact be called equivalent field of view, as there is no change in physical focal length) has the same implications as the physical focal length on a larger-sensor camera. For example, the reciprocal rule, which states that your hand-held shutter speed should be faster than 1 divided by this number, still holds. Field of view is also obviously affected, which is why the term “equivalent field of view” is more appropriate: you are looking at similar framing, despite differences in physical focal length. The effective f-number, on the other hand, is only relevant for depth of field – you won’t use it for calculating the required shutter speed, ISO or anything else.

For two cameras to take identical photos of the same scene (in terms of perspective and depth of field), there are three important requirements:

- The two cameras need to be the same physical distance from the subject you’re photographing.

- The focal lengths of the camera lenses need to be set so that the fields of view seen by the cameras are similar (= effective focal lengths should be similar).

- The lenses’ entrance pupils (the aperture size you see when you look into each lens) must be physically the same (= effective F-numbers must be identical).

E.g.

- A 100mm lens set to f/2.8 on a Nikon FX camera (which has a crop factor of 1.0) gives you the same field of view and depth of field that a 50mm lens set to f/1.4 would on a μ4/3 camera (which has a crop factor of 2.0).

- The iPhone 6 has a crop factor of 7.21, a focal length of 4.15mm and an f/2.2 maximum aperture. This iPhone gives you a similar field of view and depth of field as a full-frame camera with a 30mm lens set to f/16 does.
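The crop-factor multiplication behind these two examples can be checked with a short Python sketch. This is only an illustration, not code from the original post: the function names `equivalent_lens` and `entrance_pupil_mm` are made up here, and the entrance pupil is approximated as focal length divided by f-number.

```python
def equivalent_lens(focal_mm, f_number, crop_factor):
    """Return (effective focal length, effective f-number) in full-frame terms."""
    return focal_mm * crop_factor, f_number * crop_factor


def entrance_pupil_mm(focal_mm, f_number):
    """Approximate entrance pupil diameter: focal length divided by f-number."""
    return focal_mm / f_number


# Micro 4/3 example from the text: 50mm f/1.4, crop factor 2.0
m43_focal, m43_f_number = equivalent_lens(50, 1.4, 2.0)

# iPhone 6 example from the text: 4.15mm f/2.2, crop factor 7.21
iphone_focal, iphone_f_number = equivalent_lens(4.15, 2.2, 7.21)

print(f"Micro 4/3: {m43_focal:.0f}mm f/{m43_f_number:.1f} equivalent")
print(f"iPhone 6:  {iphone_focal:.0f}mm f/{iphone_f_number:.0f} equivalent")

# The third requirement: the physical entrance pupils should match.
print(f"iPhone pupil:     {entrance_pupil_mm(4.15, 2.2):.2f}mm")
print(f"Full-frame pupil: {entrance_pupil_mm(30, 16):.2f}mm")
```

Both pupils come out at just under 2mm, which is why the tiny phone lens at f/2.2 and the big zoom stopped down to f/16 render depth of field so similarly.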

3) Perspective and Field of View

We can now look at these phenomena in slightly more detail. If you want to play with the numbers yourself, there are some DOF calculators that let you do that online.

3.1) Field of View and Subject Size

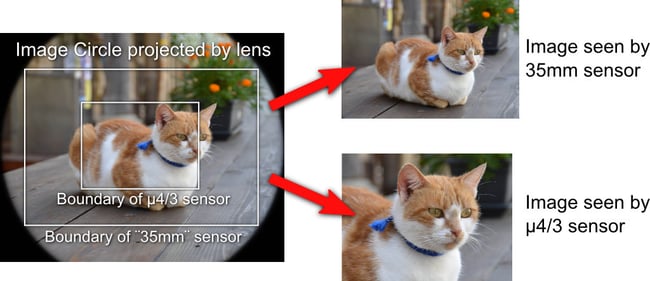

At a given distance from your subject, using a smaller sensor will have the same effect as cropping a portion of your photo from the larger-sensor camera.

The interesting thing to note is that this reduced field of view causes the subject to appear larger when you view the two photos side by side. It is easy to see that you would have gotten the same effect if you zoomed the larger-sensor camera’s lens in more, without changing the distance to your subject. This is why you multiply the lens’ focal length by the crop factor to get the effective focal length / equivalent field of view. More interestingly, this additional magnification also magnifies the background blur, reducing the effective depth of field (more about this later).
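To put a number on "equivalent field of view", here is a rough Python sketch that computes the diagonal angle of view. It rests on assumptions that are not in the original post: a simple rectilinear lens focused at infinity, a full-frame sensor of 36mm × 24mm, and a crop factor defined as the ratio of sensor diagonals. The function name `diagonal_angle_of_view_deg` is hypothetical.

```python
import math

# Assumption: full frame is 36mm x 24mm, so the diagonal is about 43.3mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def diagonal_angle_of_view_deg(focal_mm, crop_factor=1.0):
    """Diagonal angle of view for a lens focused at infinity (simple model)."""
    sensor_diagonal_mm = FULL_FRAME_DIAGONAL_MM / crop_factor
    return math.degrees(2 * math.atan(sensor_diagonal_mm / (2 * focal_mm)))


# Same 50mm lens on full frame and on a crop-factor-2.0 sensor:
print(diagonal_angle_of_view_deg(50, 1.0))
print(diagonal_angle_of_view_deg(50, 2.0))

# The iPhone 6 lens versus its full-frame equivalent (4.15mm x 7.21):
print(diagonal_angle_of_view_deg(4.15, 7.21))
print(diagonal_angle_of_view_deg(4.15 * 7.21, 1.0))
```

In this model the last two angles are identical by construction: dividing the sensor diagonal by the crop factor has exactly the same effect on the angle as multiplying the focal length by it.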

3.2) Perspective

In previous articles, we have already established that perspective only changes when the camera-to-subject distance changes, and that perspective is not affected by focal length. If you are confused by this, here are some basics to reiterate the point. Foreshortening refers to how an object’s perceived size changes with its distance from the observer, and with it the relative sizes of background and subject.

Foreshortening plays a big role in art, and has been extensively studied over the ages. Artists originally only needed to learn the way in which distant objects appear smaller to a human observer. Humans view the world through their eyes, which have a constant focal length of approximately 22mm at f/2.1 but an effective focal length of 43mm. Could you therefore say that our eyes have a crop factor of 2.0? More details can be found here.

Our camera gear gives us the opportunity to change focal length. Longer focal lengths are typically used from further away, and it is this greater distance that reduces the relative differences in size between a subject and the distant background, whereas wide angles used up close exaggerate this difference.

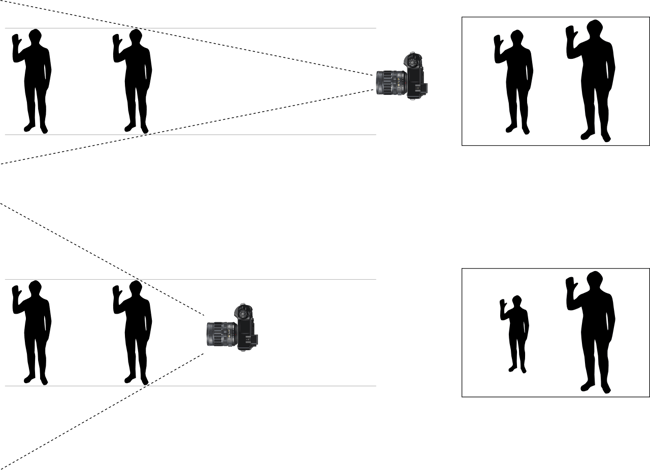

Perspective (i.e. relative sizes of different objects in the frame) only depends on the distance from the subject. For this reason, two cameras with different size sensors need to be at the same distance from their subjects in order to create photographs with a similar perspective.
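A small Python sketch can make this concrete. It uses a simplified pinhole-style model in which the projected height of an object is proportional to focal length times object height divided by distance; that model, the function names and the example scene (a 1.8m person in front of a 10m tree) are assumptions for illustration, not taken from the original post.

```python
def image_height_mm(focal_mm, object_height_mm, distance_mm):
    """Projected height on the sensor in a simple pinhole-style model."""
    return focal_mm * object_height_mm / distance_mm


def subject_to_background_ratio(focal_mm, subject, background):
    """Ratio of projected sizes; each object is (height_mm, distance_mm)."""
    return (image_height_mm(focal_mm, *subject)
            / image_height_mm(focal_mm, *background))


person = (1800, 3000)    # 1.8m tall, 3m from the camera
tree = (10000, 30000)    # 10m tall, 30m from the camera

# Changing focal length scales both objects equally: the ratio is unchanged.
print(subject_to_background_ratio(24, person, tree))
print(subject_to_background_ratio(200, person, tree))

# Stepping back 3m changes both distances, and with them the ratio.
person_far = (1800, 6000)
tree_far = (10000, 33000)
print(subject_to_background_ratio(24, person_far, tree_far))
```

The focal length cancels out of the ratio entirely, which is the numerical version of the statement that only distance controls perspective.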

4) Depth of Field

4.1) What happens when an object is “not in focus”

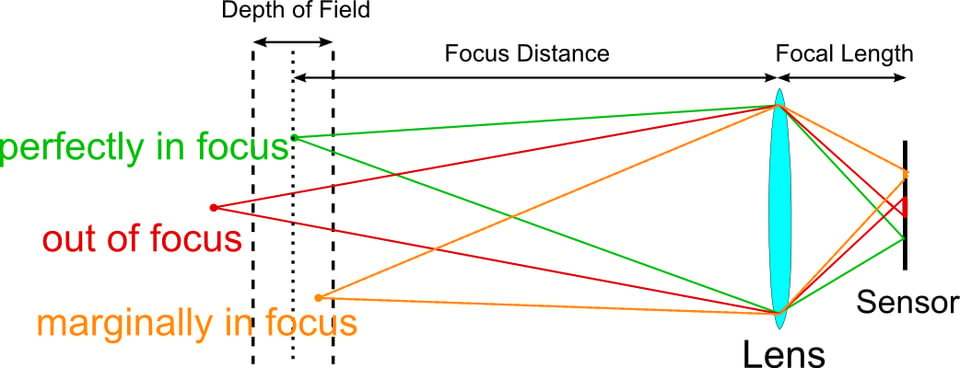

First let’s look at what happens in the imaging process. The lens model in the diagram is much simplified, but captures the essential process:

There is one specific distance at which the lens focuses. This distance (called the focus distance) can be adjusted, but at any given instant all points at this distance are projected as points on the image plane.

Whenever a point is further away from the lens than this unique focus distance, its light rays don’t focus on the sensor any more, but intersect somewhere in the air in front of the sensor. These light rays diverge again after their crossing point, ending up as a blurred circle on the sensor. Similarly, when an object is closer than the focus distance, the corresponding light rays hit the sensor before they converge, also forming a blurred circle on the sensor. You may now better understand the pretty bokeh circles that you get when you photograph distant lights while the lens is focused on a nearby subject. The shape of this blurry spot is actually the same as the shape of the lens aperture, and can be manipulated by using a paper cut-out to give the aperture a custom shape such as a heart.

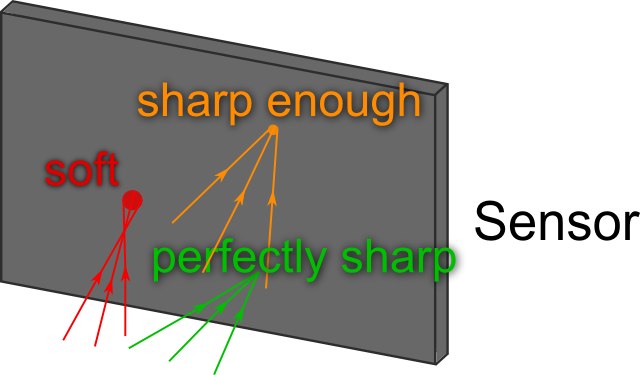

4.2) Circle of Confusion

As we saw from the light ray diagram above, there is only a single distance at which even an ideal lens will focus the image perfectly. A point source at any other distance is blurred to a circular blob on the image plane that is called the circle of confusion (CoC). In practice, however, there is a range in which the CoC is imperceptible, as your eyes aren’t good enough to tell that the light is blurred to form a non-zero-sized disc. The transition from imperceptible to perceptible blur varies from person to person but on average subtends an angle of approximately 1 arc minute, as seen from your eye.

Now we can define Depth of Field: it is the region where the CoC is less than a certain value, i.e. where the entire image or a particular area of the image is perceived to be “sharp enough”. Depth of field is therefore based on some definition of “acceptable” sharpness and is essentially an arbitrary specification. The CoC cut-off size that defines depth of field ultimately depends on the resolution of the human eye, as well as the magnification at which the image is viewed.

At normal reading distance 1 arc minute represents a diameter of about 0.063 mm on a piece of paper – about as wide as the thickness of a human hair. Interestingly, the photographic community long ago and for some unclear reason decided that they will instead settle for a coarser limit to the circle of confusion, roughly 0.167 mm, which means you might find depth of field scales printed on some lenses to be a bit over-optimistic. These DOF scales are therefore more a crude rule of thumb than anything useful.
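The arc-minute figures can be reproduced with a few lines of Python. Note the assumptions: the article does not say what "normal reading distance" is, so the viewing distances below are guesses, and the function names are made up for this sketch. A distance of roughly 217mm reproduces the 0.063mm figure, while the commonly quoted 250mm gives a slightly larger value.

```python
import math


def blur_diameter_mm(viewing_distance_mm, angle_arcmin=1.0):
    """Diameter that subtends the given angle at the given viewing distance."""
    angle_rad = math.radians(angle_arcmin / 60.0)
    return 2 * viewing_distance_mm * math.tan(angle_rad / 2)


def subtended_arcmin(diameter_mm, viewing_distance_mm):
    """Angle (in arc minutes) subtended by a disc of the given diameter."""
    angle_rad = 2 * math.atan(diameter_mm / (2 * viewing_distance_mm))
    return math.degrees(angle_rad) * 60.0


# Assumed viewing distances (not stated in the article):
print(blur_diameter_mm(217))   # close to the 0.063mm quoted above
print(blur_diameter_mm(250))   # a common "reading distance" convention

# How coarse is the traditional 0.167mm limit at the same distance?
print(subtended_arcmin(0.167, 217))
```

Under these assumptions the traditional 0.167mm limit corresponds to a bit more than two and a half arc minutes, which is why lens DOF scales look optimistic next to the 1 arc minute criterion.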

4.3) Three examples of how a smaller sensor influences Depth of Field

4.3.1) Smaller Sensor = decreased depth of field (if identical focus distance, physical focal length and physical f-number)

When you put photographs from two cameras next to each other to compare them, you are typically looking at these images at the same size. However, the image sensors that generated these two images may be very different in size. For example: the iPhone has a sensor that is less than one seventh the size of a 35mm full-frame DSLR’s in each of its dimensions. This means that the physical image that was projected onto the image plane of the iPhone had to be magnified more than seven times as much as the DSLR’s image so that it could be displayed at the same size in the side-by-side comparison in this post.

This magnification magnifies everything, including imperfections and blurring in the projected image. This means that, at the same distance from your subject, at the same physical focal length and aperture setting, a camera with a smaller sensor will have shallower depth of field than the one with a larger sensor. The images will have the same perspective, but different fields of view (framing), so it is a bit of an apples and oranges comparison. However, the result is real, and goes contrary to common knowledge and what one might have expected!

4.3.2) Smaller Sensor = increased depth of field (if identical focus distance, effective focal length and physical f-number)

As we saw, the effective f-number of a camera with a smaller sensor in terms of depth of field is higher by a factor equal to its crop factor. This is because at a given distance from your subject, depth of field depends on the physical entrance pupil size. To have an equivalent field of view, the smaller-sensor camera needs to have a shorter physical focal length. At the same physical f-number this corresponds to a smaller entrance pupil size, and hence deeper depth of field.

4.3.3) Smaller Sensor = increased depth of field (if identical subject size, physical focal length and physical f-number)

With the same physical focal length, the smaller-sensor camera has a tighter field of view. In order for the subject of the smaller-sensor camera to fill the same proportion of the frame as in the larger-sensor camera, we have to be further away from it. Moving further away from the subject increases the focusing distance, which strongly increases depth of field. Very roughly speaking, depth of field increases with the square of the focus distance. This effect is counteracted by the shallower depth of field due to the increased magnification explained earlier, but because of the more powerful square-law relationship the increase in DoF dominates, yielding a net increase.
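The three cases in 4.3 can be compared numerically with the standard thin-lens depth of field approximation (hyperfocal distance, near limit, far limit). The sketch below is not the author's calculation: it assumes a full-frame circle of confusion of 0.030mm (a common convention), scales that value down by the crop factor, and, for the third case, approximates "same subject size" by multiplying the distance by the crop factor. The function name is hypothetical.

```python
import math

# Assumption: a commonly used circle of confusion for full frame, in mm.
FULL_FRAME_COC_MM = 0.030


def depth_of_field_mm(focal_mm, f_number, focus_mm, crop_factor=1.0):
    """Total depth of field, with the CoC scaled down by the crop factor."""
    coc_mm = FULL_FRAME_COC_MM / crop_factor
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    if focus_mm >= hyperfocal:
        return math.inf  # everything out to infinity is acceptably sharp
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return far - near


# Reference: full frame, 50mm f/2.8, focused at 3m.
reference = depth_of_field_mm(50, 2.8, 3000, crop_factor=1.0)

# 4.3.1: same distance, same physical lens and f-number, crop factor 2.0
case_1 = depth_of_field_mm(50, 2.8, 3000, crop_factor=2.0)

# 4.3.2: same distance, equivalent field of view (25mm), same f-number
case_2 = depth_of_field_mm(25, 2.8, 3000, crop_factor=2.0)

# 4.3.3: same physical lens, roughly the same subject size (twice as far)
case_3 = depth_of_field_mm(50, 2.8, 6000, crop_factor=2.0)

# Full equivalence: 25mm at f/1.4 on the crop-factor-2.0 camera
equivalent = depth_of_field_mm(25, 1.4, 3000, crop_factor=2.0)

for label, value in [("reference", reference), ("4.3.1", case_1),
                     ("4.3.2", case_2), ("4.3.3", case_3),
                     ("equivalent", equivalent)]:
    print(f"{label:>10}: {value / 1000:.2f} m")
```

With these assumed numbers, case 4.3.1 comes out shallower than the full-frame reference, cases 4.3.2 and 4.3.3 come out deeper, and the fully equivalent 25mm f/1.4 setup lands within about one percent of the reference.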

For the actual mathematical equations, follow this link.

5) The Bottom Line

With two cameras that have very different size sensors you can take photographs that look exactly the same, in terms of Depth of Field and Perspective. However, a large sensor camera gives you more creative freedom in the ability to isolate your subject from the image background.

You cannot simply substitute full-frame lenses with “equivalent” focal length alternatives on smaller sensor cameras because f/2.8 may not be the same f/2.8 you’re used to. And if you look at really fast lenses that give you similar results in terms of subject isolation capabilities (f/1.4 or faster lenses), you might be a bit disappointed to find out that they are either more expensive, or do not offer autofocus capabilities.

In the above case, Olympus should actually be offering you a 12-35mm f/1.4 lens to really give you a similar versatility that the full-frame 24-70mm f/2.8 lens offers. Unfortunately, such a lens does not exist, no matter how much money you want to spend. You simply cannot beat physics!

Nothing has really changed since the film days – larger film and larger sensors have a head start in capturing more detail, to produce cleaner images and a better ability to isolate subjects. Am I saying that bigger is always better? No, of course not, as there are pros and cons to each system. In terms of pure detail and image quality, smaller cameras today produce stunning images that large sensor cameras could not match just a few years back. The degree to which a larger sensor’s possibilities are realized depends in part on the skill of the camera manufacturer. Furthermore, these advantages have implications on size, weight and cost. For many photographers the trade-offs that a large-sensor system demands are too much to embrace.

The choice of a camera system today boils down to one’s needs. For most photographers out there, smaller systems are going to be more practical. Professionals and aspiring professionals will be choosing larger systems due to the above-mentioned advantages. Hence, there is no right or wrong in picking one system over another.

“Nothing has really changed since the film days – larger film and larger sensors have a head start ….. and a better ability to isolate subjects.”

Yes, but isolating subjects isn’t always desirable, as I discovered many years ago shooting architectural subjects with a 4×5 view camera. Too *little* DOF was often a problem, requiring apertures of f32 and f64 to capture the desired image. Apertures which, not surprisingly, create exposure issues in anything but bright light.

There’s no “best” format, there’s only “best for the job at hand.” Sometimes that’s MF or even large format, sometimes it’s M43, and sometimes it’s a cell phone. The tendency of “full frame” shooters to view everything in terms of the ability to get shallow DOF has stifled creativity. I find many of my own favorite photos are ones with deep DOF, where I can see clearly into the distance, and the subject is shown in relationship to its environment, rather than isolated from it.

For “4.3.1) Smaller Sensor = decreased depth of field (if identical focus distance, physical focal length and physical f-number)”, I think it should be “same depth of field”? As illustrated in your depth of field illustration in 4.1), the depth of field is not a function of sensor size. It is only decided by focal distance, physical focal length and aperture?

A smaller sensor, or a crop from the larger sensor, requires more image-space magnification to produce the same size print. Therefore, all out-of-focus blur will be magnified, resulting in a decreased depth of field.

This is why we scale the circle of confusion with sensor size, or crop size:

en.m.wikipedia.org/wiki/…_on_d/1500

Francois – thanks for your article and the depth of your presentation. Despite this, I think your article makes a simple concept complex and some of your conclusions, though technically accurate, are misleading in the real world. Your opening comparison between the iPhone photo and the d600 shot really contains all the information we need. If we extrapolate from that to comparisons between other lenses, fx on fx sensors, dx on dx sensors, fx on dx sensors and m4/3 on m4/3 sensors, we can estimate how to create the appropriate field of view to establish similar shots with similar DOF. To imply that the two 17-35 lenses (one equivalent and the other fx) cannot produce identical images, or that Olympus m4/3 lenses of 12-35 (Olympus produces a 12-40 f2.8 and Panasonic a 12-35 f2.8) defy physics, is pedantic. Yes they are not the same, but they can produce identical images just as your iPhone and d600 can.

Hey David, sadly for many people today with far too limited attention spans the “opening comparison between the iPhone photo and the d600 shot really contains all the information we need” is probably all they can be bothered to absorb. However, for those of us who (even if we already knew 60-80% of the facts) are really interested in understanding this stuff, this article was brilliantly written and comprehensive, even if at times it made you stop and think it over. If to you that is simply “pedantic” then that is a sad reflection on today’s ‘don’t do detail / don’t have time for all this’ society, but after searching around for some time this was by far and away the best resource I could find on the subject and I applaud the author.

Hi. Great comments and great article. Can anyone answer this simple question for me about FX and DX cameras and lenses?? It is 2 parts for your comments/help. First, compare the same photo taken with a different FX lens, on both cameras, and then with the exact same FX lens, on both cameras.

If I want to get the exact same original photo (taken with a fixed prime FX 85mm 1.8 on a FX camera) on a DX camera will both of these 2 ways accomplish it?

Scenario #1 Adjust for the 1.5 crop factor by adjusting both the lens and aperture i.e. use a 58mm 1.2 (87mm DX equiv). I saw a good article doing this with a 58mm 1.4 and it was almost exactly the same except with the 58mm photo the buildings in the far far background had a little less blur. I don’t know why? Unless the distant DOF was somehow still extended slightly because it was a 1.4 and not 1.2? Here is the link, very good example,

neilvn.com/tange…-of-field/

Scenario #2 I use the exact same Fixed Prime lens (FX 85 1.8) on a DX crop-sensor. If I move backwards and focus with my feet say from 10 feet to 15 feet. I have adjusted for the FOV~Angle of View (same Framing) but the DOF is now different i.e. deeper/extended so I have less bokeh blur by a few stops or 1.5 margin. Is this correct and there is absolutely no way to counter this effect when you do the swap with a Fixed Prime lens and not a variable aperture FX lens? Because I can not change the aperture on this particular lens to 1.2. then using this specific lens on the DX camera, will never give me the same look/photo because of the DOF increase. Correct?

Thanks, G

The 1.5 crop factor works for focal length and aperture (regarding depth of field). A 35mm sensor with an 85mm f1.8 lens will look the same as a 56mm f1.2 on a sensor with a 1.5 crop factor.

85mm / 1.5 = 56.67mm

This means you will get the same composition at the same distance to the subject.

f1.8 / 1.5 = f1.2

This means you will get the same depth of field at the same distance to the subject.

As you wrap your head around that you realize that larger sensors have a natural advantage in terms of wider views and shallower depth of field. Also, people complain about lens size and price at full frame, but consider that a 2.8 zoom micro 4/3 lens costs 800$ and is taking the same pictures as a 5.6 zoom lens on full frame: you can get a super zoom lens with wider variable aperture for the same price. I use Sony A7s “FX” with fast primes, an A6000 “DX” with a constant f4 zoom and a Panasonic MFT G7 with the kit and a couple other fast lenses. Each has advantages over the other and I use them all.

I like using the mft system because I can’t push the iso up and keep shutter above 1/200 for every shot like I can with the A7s, so it challenges me and opens up my eyes to slower shutter speed shooting, which has its place in my heart. The A7s is the “I’m inside and I have to get the moment no matter what” camera, and it doesn’t disappoint. The a6000 is the one that I would loan to friends or students to learn on. It’s not great at anything but decent at everything.

Hope that helps,

Hi,

I have (maybe) another point of view on that matter. And of course I am not 100% sure I’m right.

Your 85mm has different fields of view depending on whether you put it on a FX or DX sensor. The depth of field AND background compression WILL REMAIN THE SAME.

If you put your lens on DX, you will have to move backwards, which changes your distance to the subject, and that changes the background compression. The depth of field will remain the same. And now you will have the same field of view as on FX.

I reformulate.

An 85mm f/1.8 will always remain an 85mm f/1.8 whether you put it on FX, DX, M4/3, etc. If you stay at the same distance from the subject, the only thing that will change is the crop in the field of view. The DOF and background compression will not change.

So it’s impossible IMO to compare this lens on different sensor size, or different lenses, because :

1. if you change your distance to the subject, you change the background compression

2. if you change the focal length, you change the background compression

The problem and difficulty is more a background compression problem than a DOF problem.

In my case, on “FX” I have these 3 lenses :

50mm f/0.95

85mm f/1.4

135mm f/1.8

The 50mm will ALWAYS have the shallowest DOF

The 135mm will ALWAYS have the strongest background compression

(and I can say that an 85mm f/1.8 has the same DOF as the 135mm but not the same background compression, and fov of course)

So when I compare pictures from these different lenses it is very difficult to see “in real life” which one has the shallowest DOF, because the background compression gives the IMPRESSION of a shallower DOF. You see what I mean ?

In theory a f/0.95 lens has shallower DOF than a f/1.4 or f/1.8 lens. But because a longer lens has a stronger background compression, it gives the impression “in real life” of a shallower depth of field, or better said, “a stronger subject isolation”.

With longer focal lengths the circles of confusion “lens blur” are projected onto more pixels of the sensor. The depth of field is actually not different but can appear shallower in your final frame.

If you shot the same subject without moving with the 50mm at 1.4 and the 85mm at 1.4 and then cropped your 50mm image to share the same composition, you would get the same looking blur from both. If you take a blurry 24MP picture and shove the pixels together to fit in an 8MP file, the actual image is sharper (the blurry edges take up fewer pixels).

Lenses do a similar thing with light. This is essentially why a wide angle 2.8 lens appears to have a deeper depth of field. It’s shoving a huge amount of light information onto the small sensor size. A telephoto 2.8 lens isolates a small amount of light information and spreads it onto the same sensor size. AKA the blurred parts render larger in the final digital file even though the actual lens aperture is the same.

Hi !

Thank you for this great post.

I have a question.

Camera : full frame

1) Which one of these lenses has the shallower depth of field, compared at the same (or comparable) field of view : 135mm f/2, 85mm 1.4 or 50mm 0.95.

2) Which one of these lenses has the shallower depth of field for taking a full body “portrait”.

I’m struggling to find an answer.

I figured it out!

1) the 50mm 0.95

2) the 50mm 0.95 but with the perspective compression, the blurry background caused by the 135mm will isolate the subject more

At a comparable field of view, only a lens with the same aperture will have the same depth of field.

Only the perspective and field compression will change, giving a different perception of the subject in relation to the environment.

Hi & Happy New Year! Ok I’m not anywhere near as knowledgeable (or emotionally invested ;) as most of your commenters here but I am wondering about this subject specifically. I just got an Olympus OM-D 5 Mark II with a 25mm f1.8 lens.

I like a lot about it except I’m not entirely happy with the portraits it’s produced. I can’t exactly put my finger on it but there is something harsh about them. It doesn’t matter what lighting I use it seems that if there is a wrinkle or a blemish on a face it becomes more sharp/defined in the photo, stands out more than it should. Is this a lens issue, an operator issue, or is this the sensor magnification you were talking about?

I bet William Fox Talbot never had to bother with all of this!

Thanks though for sharing so much research freely on the web… and for the discussion it generated. Useful stuff.

My observation, however, is that photographers need to remember their photography first and maybe keep all this technological stuff for the long dark winter evenings… how many great shots have people missed because they’ve been looking under the hood/bonnet for too long?

As I write this there is the most amazing rain and sun combination outside my window, so excuse me while I just go and seize the moment…

It’s usually easier to understand something if you try it yourself, so I did:

www.dankspangle.com/

As my friend Hamish commented: “It’s as simple as this really; the larger the sensor the closer you have to get to fill the frame with a given focal length. The closer you get the narrower the depth of field.”

Wow. What a good article. It seems like a chapter in a comprehensive book on photography. Carefully and clearly written and beautifully illustrated. Well done, Francois.