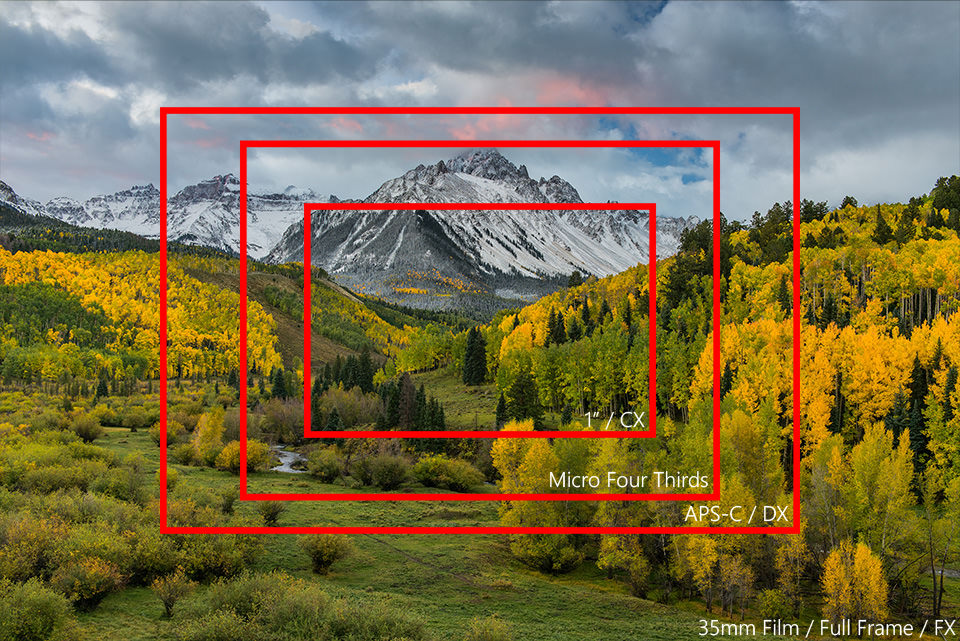

The subject of sensor crop factors and equivalence has become rather controversial among photographers, sparking heated debates on photography sites and forums. So much has been posted on this topic that it almost feels redundant to write about it again. Sadly, with all the great and not-so-great information out there on equivalence, many photographers are only left more puzzled and confused. Thanks to so many different formats available today, including 1″/CX, Micro Four Thirds, APS-C, 35mm/Full Frame and Medium Format (in different sizes), photographers are comparing these systems by calculating their equivalent focal lengths, apertures, depth of field, camera to subject distances, hyperfocal distances and other technical jargon, to prove the inferiority or the superiority of one system over another. In this article, I want to bring up some of these points and express my subjective opinion on the matter. Recognizing that this topic is one of the never-ending debates with strong arguments from all sides, I do realize that some of our readers may disagree with my statements and arguments. So if you do disagree with what I say, please provide your opinion in a civilized manner in the comments section below.

Before we get started, let’s first go over some of the history of sensor formats to get a better understanding of the past events and to be able to digest the material that will follow more easily.


1) The Birth of the APS-C Format

When I first started my journey as a photographer, the term “equivalent” was very foreign to me. The first lens I bought was a kit lens that came with my Nikon D80 – it was the Nikkor 18-135mm DX lens, a pretty good lens that served as a learning tool for a beginner like me. When I researched about the camera and the lens, references to 35mm film did not bother me, since I had not shot film (and thus did not use a larger format than APS-C). At the time, Nikon had not yet released a full-frame camera and few could afford the high-end Canon full-frame DSLRs, so the term “equivalent” was mostly targeted at 35mm film shooters. But why did the first DSLR cameras have sensors that were smaller than the classic 135 film frame? Why do we even have the question of equivalence that’s on the minds of so many photographers?

Today, APS-C (or any other smaller-than-full-frame format for that matter) is marketed as the compact and inexpensive choice, and the market is filled with DSLRs and other compact / interchangeable lens cameras. With smaller sensors come potentially smaller, lighter camera bodies and lenses. But it wasn’t always like that, and it certainly was not the reason why APS-C took off as a popular format. Due to technical issues with designing large sensors and their high cost of manufacturing, it was challenging for camera manufacturers to make full-frame digital cameras at the time. So smaller sensors were not only cheaper to make, but were also much easier to sell. More than that, the APS-C / DX format was not originally intended to be “small and compact”, as it is seen today. In fact, the very first APS-C cameras by both Nikon and Canon were as big as the high-end DSLRs today and certainly not cheap: Nikon’s D1 with a 2.7 MP APS-C sensor was sold at a whopping $5,500, while Canon was selling a lower-end EOS D30 with a 3.1 MP APS-C sensor for $3K.

As a result of introducing this new format, manufacturers had to find a way to explain that the smaller format does impact a few things. For example, looking through a 50mm lens on an APS-C sensor camera did not provide the same field of view as when using that same lens on a 35mm film or a full-frame digital camera. How do you explain that to the customer? And so manufacturers started using such terms as “equivalent” and “comparable” in reference to 35mm, mostly targeting existing film shooters and letting them know what converting to digital really meant. Once full-frame cameras became more popular and manufacturers produced cheaper and smaller lenses for the APS-C format, we started seeing “advantages” of the smaller format when compared to full-frame. Marketers quickly moved in to tell the masses that a smaller format was a great choice for many, because it was (or, actually, has become) both cheaper and lighter.

To summarize, the APS-C format was only born because it was more economical to make and easier to sell – it was never meant to be a format that competes with larger formats in terms of weight or size advantages as it does today.

2) The Birth of APS-C / DX / EF-S Lenses

Although the first APS-C cameras were used with 35mm lenses that were designed for film cameras, manufacturers knew that APS-C / crop sensors did not utilize the full image circle. In addition, there was a problem with using film lenses on APS-C sensors – they were not wide enough! Due to the change in field of view, getting a truly wide angle of view required the widest 35mm film lenses, which were rather expensive and heavy, and choices were limited. Why not make smaller lenses with a smaller image circle that can cover wider angles without the heft and size? That’s how the first APS-C / DX / EF-S lenses were born. Nikon’s first DX lens was the Nikkor 12-24mm f/4G lens to cover wide angles and Canon’s first lenses were the EF-S 18-55mm f/3.5–5.6 and EF-S 10-22mm f/4.5–5.6, which were also released to address similar needs, but for more budget-conscious consumers. Interestingly, despite the efforts by both manufacturers to make smaller and more affordable lenses, neither the DX nor the EF-S line really took off. To date, Nikon has only made 23 DX lenses in total, only two of which can be considered of “professional” grade, while Canon’s EF-S lens line is limited to 21 lenses, 8 of which are variations of the same 18-55mm lens. Canon does not have any professional grade EF-S “L” lenses in its line. So the idea of providing lightweight and smaller lens options of high grade was not something Nikon or Canon truly wanted to do, when they could crank out lenses for full-frame cameras.

3) The Need for Lens Equivalence and Crop Factor

Since the APS-C format was relatively new and the adoption rate of 35mm film cameras was very high in the industry, field of view equivalence often expressed as “equivalent focal length” made sense. It was important to let people know that a 50mm lens gave a narrower field of view on APS-C, similar to a 75mm lens on a 35mm film / full-frame camera. Manufacturers also came up with a formula to compute the equivalent field of view in the form of a “crop factor” – the ratio of 35mm film diagonal to the APS-C sensor diagonal. Nikon’s APS-C sensors measuring 24x16mm have a diagonal of 29mm, while full-frame sensors measuring 36×24 have a diagonal of 43mm, so the ratio difference between the two is approximately 1.5x. Canon’s APS-C sensors are slightly smaller and have a crop factor of 1.6x. So calculating the equivalent field of view got rather simple – take the focal length of a lens and multiply it by the crop factor. Hence, one could easily calculate that a 24mm lens on a Nikon DX / APS-C camera was similar to a 36mm lens on a full-frame camera in terms of field of view.
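The crop factor arithmetic described above can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the sensor dimensions are the nominal 24x16mm and 36x24mm figures quoted in the text, not exact measurements of any particular camera.

```python
import math

def diagonal_mm(width_mm, height_mm):
    """Diagonal of a rectangular sensor or film frame."""
    return math.hypot(width_mm, height_mm)

def crop_factor(width_mm, height_mm, reference=(36.0, 24.0)):
    """Ratio of the reference (35mm) diagonal to the given sensor diagonal."""
    return diagonal_mm(*reference) / diagonal_mm(width_mm, height_mm)

def equivalent_focal_length(focal_mm, factor):
    """Focal length giving a similar field of view on the reference format."""
    return focal_mm * factor

# Nominal Nikon DX dimensions as quoted in the text (an approximation).
nikon_dx = crop_factor(24.0, 16.0)

print(round(nikon_dx, 2))                            # crop factor
print(round(equivalent_focal_length(24, nikon_dx)))  # 24mm lens on DX
print(round(equivalent_focal_length(50, nikon_dx)))  # 50mm lens on DX
```

Note that the function only rescales the field of view reference; nothing about the lens itself is modified.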

However, over time, the crop factor created a lot of confusion among beginners. People started to say things like “Image was captured at 450mm focal length”, when in fact they shot with a 300mm lens on an APS-C camera. They felt like they could say such things, thinking their setup was giving them longer “reach” (meaning, allowing them to get closer to the action), while all it did was give them a narrower field of view due to the sensor cropping the image frame. So let’s establish the very first fact: the focal length of a lens never changes no matter what camera it is attached to.

4) Lens Focal Length vs Equivalent Focal Length

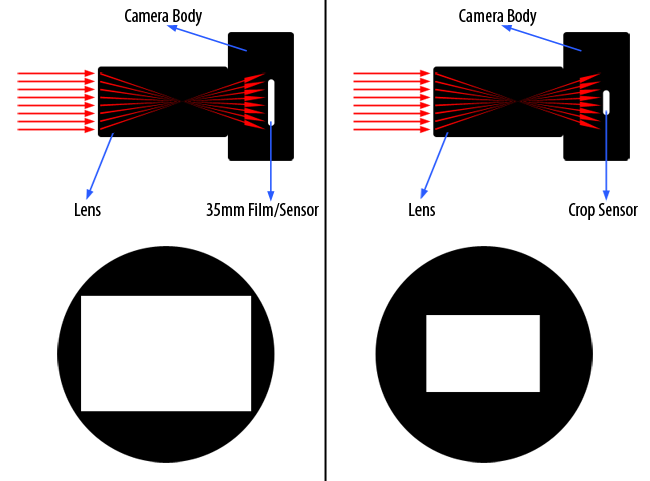

Whether you mount a full-frame lens on a full-frame, APS-C, Micro Four Thirds or 1″ CX camera, the physical properties of the same lens never change – its focal length and aperture stay constant. This makes sense, as the only variable that is changing is the sensor. So, those that say that “a 50mm f/1.4 lens is a 50mm f/1.4 lens no matter what camera body it is attached to” are right, but with one condition – it must be the same lens (more on this below). The only thing that can change the physical properties of a lens is another lens, such as a teleconverter. Remember, focal length is the distance from the optical center of the lens focused at infinity to the camera sensor / film, measured in millimeters. All that happens as a result of a smaller image format / sensor is cropping, as illustrated in the below image:

If I were to mount a 24mm full-frame lens on an APS-C camera to capture the above shot, I would only be cutting off the corners of the image – not getting any closer physically. My focal length does not change in any way. It is still a 24mm lens. In terms of equivalent focal length, the resulting crop would give me a narrower field of view that is equivalent to what a 36mm lens would give on a full-frame camera. However, the key word here is “field of view”, as that’s the only thing that differs. This is why I prefer to use the term “equivalent field of view”, rather than “equivalent focal length”, as there is no change in focal length.
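To see why “equivalent field of view” is the more accurate term, one can compute the diagonal angle of view directly. The sketch below assumes a simple rectilinear lens focused at infinity and the nominal sensor sizes used elsewhere in this article; the function name is hypothetical.

```python
import math

def diagonal_fov_deg(focal_mm, sensor_w_mm, sensor_h_mm):
    """Diagonal angle of view, assuming a rectilinear lens focused at infinity."""
    diag = math.hypot(sensor_w_mm, sensor_h_mm)
    return math.degrees(2 * math.atan(diag / (2 * focal_mm)))

fov_24_on_ff = diagonal_fov_deg(24, 36, 24)    # 24mm lens, full-frame
fov_24_on_apsc = diagonal_fov_deg(24, 24, 16)  # same 24mm lens, APS-C
fov_36_on_ff = diagonal_fov_deg(36, 36, 24)    # 36mm lens, full-frame

print(f"24mm on full-frame: {fov_24_on_ff:.1f} degrees")
print(f"24mm on APS-C:      {fov_24_on_apsc:.1f} degrees")
print(f"36mm on full-frame: {fov_36_on_ff:.1f} degrees")
```

The focal length passed in for the APS-C case is still 24mm; only the sensor dimensions change, and the resulting angle matches that of a 36mm lens on full-frame.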

If you were to try a quick experiment by taking a full-frame lens and mounting it on a full-frame camera, then mounting the same lens on different camera bodies with smaller sensors using adapters (without moving or changing any variables), you would get a similar result as the above image. Aside from differences in resolution (topic discussed further down below), everything else would be the same, including perspective and depth of field (actually DoF can be different between sensor sizes, see references to DoF below). So the background and foreground objects would not appear any closer or further away, or look more or less in focus. What you would see is in-camera cropping taking place, nothing more.

The above is a rather simplified case, where we are taking a full-frame lens with a large image circle and mounting it on different cameras with smaller sensors using adapters. Without a doubt, the results will always be the same with the exception of field of view. However, that’s not a practical case today, since smaller sensor cameras now have smaller lenses proprietary to their camera systems and mounts. Few people use large lenses with smaller formats than APS-C, because mount sizes are different and they must rely on various “smart” or “dummy” adapters, which unnecessarily complicate everything and potentially introduce optical problems. Again, there is no point in making large lenses for all formats when the larger image circle is unused. When manufacturers make lenses for smaller systems, they want to produce lenses as small and as lightweight as possible. So when new interchangeable lens camera systems were introduced from manufacturers like Sony, Fuji, Olympus, Panasonic and Samsung, they all came with their “native” compact and lightweight lenses, proprietary to their lens mounts.

5) ISO and Exposure / Brightness

In film days, ISO stood for the sensitivity of film. If you shot with ISO 100 film during daylight and had to move to low light conditions, you had to change out film to a higher sensitivity type, say ISO 400 or 800. So traditionally, ISO was defined as “the level of sensitivity of film to available light”, as explained in my article on ISO for beginners. However, digital sensors act very differently than film, as there is no varying sensitivity to different light. In fact, digital sensors only have one sensitivity level. Changing ISO simply amplifies the image signal, so the sensor itself is not getting any more or less sensitive. This results in shorter exposure time / more brightness, but with the penalty of added noise, similar to what you see with film.

To make it easier for film shooters to switch to digital, it was decided to use the same sensitivity in digital sensors as in film, so standards such as ISO 12232:2006 were written, which guide manufacturers on how exposure should be determined and ISO speed ratings should be set on all camera systems. After all, ISO 100 film was the same no matter what camera you attached that film to, so it made sense to continue this trend with digital. These standards are not perfect though, as the way “brightness” is determined can depend on a number of factors, including noise. So there is a potential for deviations in brightness between different camera systems (although usually not by more than a full stop).

However, once different sensor sizes came into play, things got a bit more complex. Since the overall brightness of a scene depends on the exposure triangle comprised of ISO, Aperture and Shutter Speed, there are only two variables that can change between systems to “match” brightness: ISO and Aperture (Shutter Speed cannot change, as it affects the length of exposure). As you will see below, the physical size of aperture when looking at “equivalent lenses” in terms of field of view between different formats varies greatly, due to the drastic change in focal length. In addition, sensor performance can also be drastically different, especially when you look at the first generation CCD sensors versus the latest generation CMOS sensors. This means that while the overall brightness is similar between systems, image quality at different ISO values could differ greatly.

Today, if you were to take an image with a full-frame camera at say ISO 100, f/2.8 and 1/500 shutter speed, and took a shot with a smaller sensor camera using identical settings, the overall exposure or “brightness” of the scene would look very similar in both cases. The Nikon D810 (full-frame) at ISO 100, f/2.8, 1/500 would yield similar exposure as the Nikon 1 V3 (1″ CX) at ISO 100, f/2.8, 1/500. On one hand this makes sense, as it makes it easy for one to reference exposure settings. But on the other hand, the way brightness is yielded is different – and that brings a lot of confusion to the already confusing topic. Yes, exposure values might be the same, but the amount of transmitted light might not! The big variable that differs quite a bit across systems is the lens aperture, specifically its physical size. Although the term aperture can mean a number of different things (diaphragm, entrance pupil, f-ratio), in this particular case I am referring to the physical size, or the aperture diameter of a lens as seen from the front of the lens, also known as the “entrance pupil”. The thing is, a full frame lens will have a significantly larger aperture diameter than an equivalent lens from a smaller system. For example, if you compare the Nikkor 50mm f/1.4G lens with say the Olympus 25mm f/1.4 (50mm equivalent field of view relative to full-frame), both will yield similar brightness at f/1.4. However, does it mean that the much smaller Olympus lens is capable of transmitting the same amount of light? No, absolutely not. It just physically cannot, due to the visibly smaller aperture diameter. Let’s take a look at the math here.

6) Aperture and Depth of Field

Since the f-number (in this case f/1.4) represents the ratio between the focal length of the lens and the physical diameter of the entrance pupil, it is easy to calculate the size of the aperture diameter on the Nikkor 50mm f/1.4G. We simply take the focal length (50mm) and divide it by its maximum aperture of f/1.4. The resulting number is roughly 35.7mm, which is the physical size of the aperture diameter, or the entrance pupil. Now if we look at the Olympus 25mm f/1.4 lens and apply the same math, the aperture diameter turns out to be only about 17.9mm, exactly half! So despite the fact that the two lenses have the same f-number and cover similar fields of view, their aperture sizes are drastically different – since the area of the opening scales with the square of its diameter, one transmits four times more light than the other.
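The same division can be written out as a short Python sketch. The helper names are invented for this illustration, and the pupil is treated as a perfect circle, which is an idealization.

```python
import math

def entrance_pupil_diameter_mm(focal_mm, f_number):
    """Entrance pupil diameter = focal length / f-number."""
    return focal_mm / f_number

def pupil_area_mm2(diameter_mm):
    """Area of the pupil, idealized as a circle."""
    return math.pi * (diameter_mm / 2) ** 2

d_50 = entrance_pupil_diameter_mm(50, 1.4)  # full-frame 50mm f/1.4
d_25 = entrance_pupil_diameter_mm(25, 1.4)  # Micro Four Thirds 25mm f/1.4

# Half the diameter means a quarter of the area.
area_ratio = pupil_area_mm2(d_50) / pupil_area_mm2(d_25)

print(round(d_50, 1), round(d_25, 1), round(area_ratio, 2))
```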

Let’s take a step back and understand why we are comparing a 50mm to a 25mm lens in the first place. What if we were to mount the Nikkor 50mm f/1.4G lens on a Micro Four Thirds camera with an adapter – would the light transmission of the lens be the same? Yes, of course! Again, sensor size has no impact on light transmission capabilities of a lens. In this case, the 50mm f/1.4 lens remains a 50mm f/1.4 lens whether it is used on a full-frame camera or a Micro Four Thirds camera. However, what would the image look like? With a drastic “crop”, thanks to the much smaller Micro Four Thirds camera that has a 2.0x crop factor, the field of view of the 50mm lens would make the subject appear twice as close, as if we were using a 100mm lens, as illustrated in the below image:

As you can see, the depth of field and the perspective we get from such a shot would be identical on both cameras, given that the distance to our subjects is the same. However, the resulting images look drastically different in terms of field of view – the Micro Four Thirds image appears “closer”, although it is really not, as it is just a crop of the full-frame image (a quick note: there is also a difference in aspect ratio of 3/2 vs 4/3, which is why the image on the right is taller).

Well, such tight framing as seen on the image to the right is typically not desirable to photographers, which is why we tend to compare two different systems with an equivalent field of view and camera to subject distance. In this case, we pick a 50mm full-frame lens versus a 25mm Micro Four Thirds lens for a proper comparison. But the moment you do that, two changes take place immediately: depth of field is increased due to change in focal length, and background objects will appear less blurred due to not being as enlarged anymore. Do not associate the latter with bokeh though – objects will appear less enlarged because of physically smaller aperture diameter. If you have a hard time understanding why, just do quick math with a 70-200mm f/2.8 lens. Did you ever wonder why at 200mm the background appears more enlarged compared to 70mm? No, it is not depth of field to blame for this, not if you frame the subject the same way! If you stand 10 feet away from your subject and shoot at 100mm @ f/2.8, the aperture diameter equals 35.7mm (100mm / 2.8). Now if you double the distance from your subject by moving back to 20 feet and shoot at 200mm @ f/2.8, your aperture diameter / entrance pupil is now significantly bigger, it is 71.4mm (200mm / 2.8). As a result of this, the larger aperture diameter at 200mm will actually enlarge the background more, although depth of field remains essentially the same. That’s why shooting with a 70-200mm f/2.8 lens yields aesthetically more pleasing images at 200mm than at 70mm! Some people refer to this as compression, others call it background enlargement – both mean the same thing here.
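The 100mm versus 200mm example can be checked with a rough calculation. The sketch below is an assumption-laden illustration, not a lens simulation: it uses the thin-lens approximation, measures subject distance from the lens, and relies on the standard result that the blur disc of a point at infinity equals the entrance pupil diameter multiplied by the subject magnification. The function names are hypothetical.

```python
FOOT_MM = 304.8  # millimeters per foot

def magnification(focal_mm, subject_distance_mm):
    """Thin-lens magnification of the focused subject."""
    return focal_mm / (subject_distance_mm - focal_mm)

def infinity_blur_mm(focal_mm, f_number, subject_distance_mm):
    """Blur disc diameter on the sensor for a point at infinity (thin-lens model)."""
    pupil = focal_mm / f_number
    return pupil * magnification(focal_mm, subject_distance_mm)

m_near = magnification(100, 10 * FOOT_MM)
m_far = magnification(200, 20 * FOOT_MM)

blur_near = infinity_blur_mm(100, 2.8, 10 * FOOT_MM)  # 100mm at 10 feet
blur_far = infinity_blur_mm(200, 2.8, 20 * FOOT_MM)   # 200mm at 20 feet

print(f"magnification: {m_near:.4f} vs {m_far:.4f}")
print(f"background blur ratio: {blur_far / blur_near:.2f}")
```

Under these assumptions the subject is framed identically in both cases, yet the distant background blur doubles along with the entrance pupil diameter.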

A quick note on compression and perspective: it seems like people confuse the two terms quite a bit. In the above example, we are changing the focal length of the lens from 70mm to 200mm, while keeping the framing the same and the f-stop the same (f/2.8). When we do this, we are actually moving away from the subject that we are focusing on, which triggers a change in perspective. Perspective defines how a foreground element appears in relation to other elements in the scene. Perspective changes not because of a change in focal length, but because of a change of camera to subject distance. If you do not move away from your subject and simply zoom in more, you are not changing the perspective at all! And what about compression? The term “compression” has been historically wrongly associated with focal length. There is no such thing as “telephoto compression”, implying that shooting with a longer lens will somehow magically make your subject appear more isolated from the background. When one changes the focal length of a lens without moving, all they are doing is changing the field of view – the perspective will remain identical.

In this particular case, how closely background objects appear relative to our subject has nothing to do with how blurry they appear. Here, blur is the attribute of the aperture diameter. If you are shooting a subject at 200mm f/2.8 and then stop the lens down to f/5.6, the blur discs of background elements will appear smaller, because you have reduced the physical size of the aperture diameter. Your depth of field calculator might say that your DoF starts at point X and ends at point Y and yet the background that is located at infinity will still appear less blurry. Why? Again, because of change in aperture diameter. So going back to our previous example where we are moving from 70mm f/2.8 to 200mm f/2.8, by keeping the framing identical and moving away from the subject, we are changing the perspective of the scene. However, that’s not the reason why the background is blurred more! The objects in the background appear larger due to change in perspective, however, how blurry they appear is because I am shooting with a large aperture diameter. Now the quality of blur, specifically of highlights (a.k.a. “Bokeh”) is a whole different subject and that one hugely depends on the design of the lens.

Going back to our example, because of the change in aperture diameter and focal length, you will find things appearing more in-focus or less blurry than you might like, including objects in the foreground and background. Therefore, it is the shorter focal length, coupled with the smaller aperture diameter that make things appear less aesthetically pleasing on smaller format systems.

At this point, there are three ways one could effectively decrease depth of field and enlarge the out of focus areas in the background:

- Get physically closer to the subject

- Increase the focal length while maintaining the same f-stop

- Use a faster lens

Getting physically closer to the subject alters the perspective, resulting in “perspective distortion”, and increasing focal length translates to the same narrow field of view issue as illustrated in the earlier example, where you are too close to the subject.

It is important to note that any comparisons of camera systems at different camera to subject distances and focal lengths are meaningless. The moment you or your subject move and the focal lengths differ, it causes a change in perspective, depth of field and background rendering. This is why this article excludes any comparisons of different formats at varying distances.

Neither of the first two options above is usually a workable solution, so the last option is to get a faster lens. Well, that’s where things can get quite expensive, impractical or simply impossible. Fast aperture lenses are very expensive. For example, the excellent Panasonic 42.5mm f/1.2 Micro Four Thirds lens costs a whopping $1,600 and behaves like an 85mm f/2.5 lens in terms of field of view and depth of field on a full-frame camera, whereas one could buy a full-frame 85mm f/1.8 lens for one third of that. Manual focus f/0.95 Micro Four Thirds lenses from a number of manufacturers produce similar depth of field as an f/1.9 lens, so even those could not get close to f/1.4 aperture on full-frame (if you find the aperture math confusing, it will be discussed further down below).

You have probably heard people say things like “to get the same depth of field as a 50mm f/1.4 lens on a full-frame camera, you would need a 25mm f/0.7 lens on Micro Four Thirds camera”. Some even question why there is no such lens. Well, if they knew much about optics, they would understand that designing an f/0.7 lens that is optically good and can properly autofocus is practically an impossible job. That’s why such fast lenses with AF capabilities will most likely never exist for any system. Can you imagine how big such a lens would be?

This all leads to the next topic – Aperture Equivalence.

7) Aperture Equivalence

In my previous example, I mentioned that the Panasonic 42.5mm f/1.2 Micro Four Thirds lens is equivalent to an 85mm f/2.5 full-frame lens in terms of light transmission capabilities. Well, it makes sense if one is to look at the aperture diameter / entrance pupil of both lenses, which roughly measure between 34mm and 35mm. Because such lenses would transmit roughly the same amount of light, yield similar depth of field and have similar field of view, some would consider them to be “equivalent”.
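The usual “aperture equivalence” arithmetic multiplies both the focal length and the f-number by the crop factor, which keeps the entrance pupil diameter unchanged. Here is a minimal sketch, assuming a 2.0x crop factor for Micro Four Thirds; the function name is made up for this example.

```python
def equivalent_lens(focal_mm, f_number, crop_factor):
    """Full-frame focal length and f-number with a similar field of view and pupil size."""
    return focal_mm * crop_factor, f_number * crop_factor

eq_focal, eq_f = equivalent_lens(42.5, 1.2, 2.0)

# Entrance pupil diameters of the original lens and its "equivalent".
pupil_mft = 42.5 / 1.2
pupil_ff = eq_focal / eq_f

print(eq_focal, round(eq_f, 2))
print(round(pupil_mft, 1), round(pupil_ff, 1))
```

The strict result is f/2.4, which sits close to the f/2.5 figure used in this article. The same function applied to a 25mm f/0.7 lens returns 50mm and f/1.4.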

As a result of the above, we now have people that are saying that we should be computing equivalence in terms of f-stops between different systems, just like we compute equivalence in field of view. Some even argue that manufacturers should be specifying equivalent aperture figures in their product manuals and marketing materials, since giving the native aperture ranges is lying to customers. What they do not seem to get is that the manufacturers are providing the actual physical properties of lenses – the equivalent focal lengths are there only as a reference for the same old reasons that existed since film days, basically to guide potential 35mm / full-frame converts. Another key fact is that altering the f-stop results in differences in exposure / brightness. The same Panasonic 42.5mm f/1.2 at f/1.2 will yield a brighter exposure when compared to an 85mm f/2.5 full-frame lens, because we are changing one of the three exposure variables.

So let’s get another fact straight: smaller format lenses have exactly the same light gathering capabilities as larger format lenses at the same f-stop, for their native sensor sizes. Yes, larger aperture diameter lenses do transmit more light, but more light is needed for the larger sensor, because the volume and the spread of light also must be large enough to cover the bigger sensor area. The Panasonic 42.5mm f/1.2 may behave similarly to an 85mm f/2.5 lens in terms of aperture diameter / total light transmission, field of view and depth of field, but the intensity of light that reaches the Micro Four Thirds sensor at f/1.2 is very different than it is for an f/2.5 lens on a full-frame camera – the image from the latter will be underexposed by two full stops. In other words, the intensity of light that reaches a sensor for one format is identical to the intensity of light that reaches a sensor of a different format at the same aperture. It makes no sense to make a Micro Four Thirds lens that covers as big of an image circle as a full-frame lens, if all that extra light is wasted. Plus, such lenses would look ridiculously big on small cameras.
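The exposure gap between two f-numbers can be estimated with a one-line formula, since light intensity on the sensor scales with the inverse square of the f-number. This sketch ignores lens transmission losses (T-stops) and uses a hypothetical function name.

```python
import math

def stops_between(f_number_a, f_number_b):
    """Exposure difference in stops when going from f_number_a to f_number_b.

    Positive values mean f_number_b gives a darker exposure at the same
    ISO and shutter speed.
    """
    return 2 * math.log2(f_number_b / f_number_a)

gap = stops_between(1.2, 2.5)
print(f"f/1.2 to f/2.5: {gap:.2f} stops darker")
```

The result comes out slightly above two stops, in line with the “two full stops” figure above.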

It is important to note that although the comparison above is valid technically, a larger sensor would yield cleaner images and would allow for faster and less expensive lenses, as pointed out earlier.

8) Total Light

“Equivalence” created another ugly child: total light. Basically, the idea of total light is that smaller sensors get less total light than larger sensors just because they are physically smaller, which translates to worse noise performance / overall image quality. For example, a full-frame sensor looks two stops cleaner at higher ISOs than say Micro Four Thirds, just because its sensor area is four times larger. I personally find the idea of “Total Light” and its relevance to ISO flawed. Explaining why one sensor has a cleaner output when compared to a smaller one just because it is physically larger has one major problem – it is actually not entirely true once you factor in a few variables: sensor technology, image processing pipeline and sensor generation. While one cannot dispute that larger sensors do physically receive more light than their smaller counterparts, how the camera reads and transforms the light into an image is extremely important. If we assume that the physical size of a sensor is the only important factor in cameras, because it receives more total light, then every full-frame sensor made to date would beat every APS-C sensor, including the latest and greatest. Consequently, every medium format sensor would beat every full-frame sensor made to date. And we know it is not true – just compare the output of the first generation Canon 1Ds full-frame camera at ISO 800 to a modern Sony APS-C sensor (have a peek at this review from Luminous Landscape) and you will see that the latter looks better. Newer sensor technologies, better image processing pipelines and other factors make modern sensors shine when compared to old ones. Simply put, newer is better when it comes to sensor technology. APS-C has come a long way in terms of noise performance, easily beating first generation full-frame sensors in terms of colors, dynamic range and high ISO performance. CMOS is cleaner at high ISO than old generation CCD that struggled even at ISO 400!

Until recently, medium format cameras used to be terrible at high ISOs due to use of CCD sensors (which have other strengths). But if we look at “total light” only from the perspective of “bigger is better”, then medium format sensors are supposed to be much better than full-frame just because their sensor sizes are bigger. Looking at high ISO performance and dynamic range of medium format CCD sensors, it turns out that it is actually not the case. Only the latest CMOS sensors from Sony made it possible for medium format to finally catch up with modern cameras in handling noise at high ISOs.
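For reference, the purely geometric part of the “total light” argument can be written down as follows. This sketch only compares sensor areas and deliberately ignores everything else (sensor technology, generation, processing), which is exactly the simplification criticized here. The Micro Four Thirds dimensions of 17.3x13.0mm are a nominal figure assumed for illustration, and the function name is hypothetical.

```python
import math

def area_advantage_stops(large_wh_mm, small_wh_mm):
    """Stops of extra total light from sensor area alone, at equal exposure."""
    large_area = large_wh_mm[0] * large_wh_mm[1]
    small_area = small_wh_mm[0] * small_wh_mm[1]
    return math.log2(large_area / small_area)

FULL_FRAME = (36.0, 24.0)
APS_C = (24.0, 16.0)                # nominal Nikon DX size used in this article
MICRO_FOUR_THIRDS = (17.3, 13.0)    # assumed nominal size

ff_vs_mft = area_advantage_stops(FULL_FRAME, MICRO_FOUR_THIRDS)
ff_vs_apsc = area_advantage_stops(FULL_FRAME, APS_C)

print(f"full-frame vs Micro Four Thirds: {ff_vs_mft:.2f} stops")
print(f"full-frame vs APS-C:             {ff_vs_apsc:.2f} stops")
```

These numbers describe area ratios only and say nothing about how any real camera performs.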

My problem with “total light” is that it is based on the assumption that one is comparing sensors of the same technology, generation, analog to digital conversion (ADC), pixel size / pitch / resolution, RAW file output, print size, etc. And if we look at the state of the camera industry today, that’s almost never the case – sensors differ quite a bit, with varying levels of pixel size and resolution. In addition, cameras with the same sensors could potentially have different SNR and dynamic range performance. The noise we see on the Nikon D4s looks different than on the Nikon D810, the Canon 5D Mark III or the Sony A7s, even when all of them are normalized to the same resolution…

So how can one rely on a formula that assumes so much when comparing cameras? The results might be mostly accurate given the state of the camera industry today (with a few exceptions), so it is one’s individual choice if “mostly good enough” is acceptable or not. Total light is only true if you are looking at cameras like Nikon D800 and D7000, which have the same generation processors and same pixel-level performance. In all other cases, it is not 100% safe to assume that a sensor is going to perform relative to its physical size. Smaller sensors are getting more efficient than larger sensors and bigger is not always better when you factor in size, weight, cost and other factors. In my opinion, it is better to skip such concepts when comparing systems, as they can potentially create a lot of confusion, especially among beginners.

9) Circle of Confusion, Print Size, Diffraction, Pixel Density and Sensor Resolution

Here are some more topics that will give you a headache quickly: circle of confusion, print size, diffraction, pixel density and sensor resolution. These five bring up additional points that make the subject of “equivalence” truly a never-ending debate. I won’t spend a whole lot of time on this, as I believe it is not directly relevant to my article here, so I just want to throw a couple of things at you to make you want to stop reading this section. And if your head hurts already, just move on and skip all this junk, since it really does not matter (actually, none of the above really matters at the end of the day, as explained in the Summary section of this article).

9.1) Circle of Confusion

Every image is made out of many dots and circles, because light rays reaching the film / sensor are always in circular shape. These circular shapes or “blur spots” can be very small or very large. The smaller these blur spots are, the more “dot-like” they appear to our eyes. Basically, circle of confusion is better defined by Wikipedia as “the largest blur spot that will still be perceived by the human eye as a point”. Any part of an image, whether printed or viewed on a computer monitor that appears blurry to our eyes is only blurry because we can tell that it is not sufficiently sharp. When you get frustrated with taking blurry pictures, it happens because your eyes are not seeing enough details, so your brain triggers a response “blurry”, “out of focus”, etc. If you had bad vision and could not tell a difference between a sharp photo and a soft / blurry one, you might not see what others can. That’s why the subject of circle of confusion is so confusing – it does not take into account that your vision could be below “good”, where “good” is assumed to mean the ability to resolve or distinguish 5 line pairs per millimeter when viewing an image at a 60° angle and a viewing distance of 10 inches (25 cm). So the basic assumption is that the size of the circle of confusion, or the largest circular shape that you still perceive as a dot, is going to be approximately 0.2mm based on the above-mentioned 5 line pairs per millimeter assumption (one line pair every fifth of a millimeter equals 0.2mm). What does this have to do with equivalence, you might ask? Well, it affects it indirectly, because it is closely tied to print size and a few other things.
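To make the link to print size a bit more concrete, here is a rough sketch of how the 0.2mm figure on a print translates back to the sensor. It assumes the whole frame is enlarged to the print (scaled by the ratio of diagonals, ignoring aspect ratio cropping) and uses a 10x8 inch print purely as an example; neither the print size nor the function names come from any standard.

```python
import math

def print_coc_mm(line_pairs_per_mm=5.0):
    """Largest blur spot still seen as a point on the print."""
    return 1.0 / line_pairs_per_mm

def sensor_coc_mm(sensor_wh_mm, print_wh_mm, line_pairs_per_mm=5.0):
    """Circle of confusion on the sensor for a given print size."""
    enlargement = math.hypot(*print_wh_mm) / math.hypot(*sensor_wh_mm)
    return print_coc_mm(line_pairs_per_mm) / enlargement

PRINT_10X8_IN = (254.0, 203.2)  # example print size in millimeters (assumption)

coc_ff = sensor_coc_mm((36.0, 24.0), PRINT_10X8_IN)
coc_apsc = sensor_coc_mm((24.0, 16.0), PRINT_10X8_IN)

print(f"full-frame: {coc_ff:.4f} mm, APS-C: {coc_apsc:.4f} mm")
```

A smaller sensor has to be enlarged more to reach the same print, so its acceptable blur spot shrinks by the crop factor, which is one reason depth of field comparisons depend on format.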

9.2) Print Size and Sensor Resolution

Believe it or not, but most camera and sensor comparisons we see today directly relate to print size, as strange as it may sound! Why? Because it is automatically assumed that we take pictures in order to produce prints, the final end point of every photograph. Now the big question that comes up today, which probably sparks as many heated debates as the subject of equivalence, is “how large can you print”. This is where circle of confusion creates more confusion, because how big one can print hugely depends on what they deem “acceptable” in terms of sharpness perception at different viewing distances. If you listen to some old timers that used to or still shoot 35mm film, you will often hear them say that resolution or sharpness are not important for prints at all and that they used to print huge 24×36″ or 30×40″ prints (or larger) using 35mm film, which looked great. You will probably hear a similar story from early digital camera adopters, who will be keen to show you large prints in their living rooms from cameras that only had 6-8 megapixels. At the same time, you will also come across those, that will be telling you all about their super high resolution gigapixel prints that are more detailed than what your eyes can distinguish, telling you how life-like and detailed their prints look.

Who is right and who is wrong? Well, that’s also a very subjective opinion that will create heated debates. Old timers will laugh at the high resolution prints, telling you that you would never be looking at them that close anyway, while others will argue that a print must be very detailed and should look good at any distance to be considered worthy of occupying your precious wall space. And successful photographers like Laura Murray, who almost exclusively shoot with film will be selling prints of scanned film like this at any size their clients want, while some of us will still be debating about which camera has the best signal to noise ratio:

A big spoiler for pixel-peepers – there is not a whole lot in terms of details in such photographs. Film shooters working in fast-paced environments like weddings rarely care about making sure that the bride’s closest eye looks perfectly sharp – they are there to capture the moment, the mood, the environment. Very few film shooters will be busy giving you a lecture on circle of confusion, resolution, diffraction or other non-relevant, unimportant (for them) topics. So who is right?

No matter which side you are on, by now you probably do recognize the fact that the world is moving towards more resolution, larger prints and more details. In fact, manufacturers are spending a lot of their marketing dollars on convincing you that more resolution is better with all the “retina” displays, 4K TVs and monitors. Whether you like it or not, you are most likely already sold on it. If you are not, then you represent a small percentage of the modern population that does not crave more megapixels and gigapixels.

In fact, if you have been on the web long enough, you probably remember what the early days of the web looked like, with tiny thumbnail-size images that looked big on our 256 color VGA screens. We at Photography Life do recognize that the world is moving towards high resolution and many of our readers are now reading the site on their “retina” grade or 4K monitors, expecting larger photographs for their enjoyment. So even if some of us here at PL hate the idea of showing you more pixels and how the new 36 MP sensor is better at ISO 25,600 than the previous generation 36 MP sensor, the world is moving in that direction anyway and there is not much we can do about it.

Let’s get back to our super technical, not-so-important discussion about why print size is dictating our comparisons. Well, considering that printers are limited in how many dots per inch they can print (and that limitation bar is also being raised year after year), the math that is currently applied to how big you should print for an image to look “acceptably sharp” at comfortable viewing distances is anywhere between 240 dots per inch (dpi) and 300 dpi, sometimes accepting certain prints to go down to 150 dpi. Well, if you correlate pixels and dots at a 1:1 ratio, how big you can print with say a 16 MP resolution image versus a 36 MP resolution image (assuming that both contain enough detail and sharpness) without enlarging or reducing prints will be simple math – divide horizontal and vertical resolution by the dpi resolution you are aiming for, and you get the size. In the case of a 36 MP resolution image from the Nikon D800/D810, which produces files of 7,360×4,912 resolution, that translates to 24.53×16.37 inches (7360/300 = 24.53, 4912/300 = 16.37). So if you want a good quality print, the maximum you can produce out of a D800/D810 sensor is a 24×16″ print. Now what if we look at the Nikon D4s, which produces only 16 MP files with image resolution of 4,928×3,280? Applying the same math, the maximum print size you would get is 16×11″ (4928/300 = 16.43, 3280/300 = 10.93)! What the heck, that’s a $6500 camera that can only give you 16×11″ versus a $3000 camera that can print much larger? What’s up with that? Well, this is when things get messy, bringing the whole print size debate into big question. But wait a minute, if all that matters for a print size is the damn pixel resolution, what about comparing the Nikon D4s with the Nikon D7000 or Fuji X-T1 that have the same 16 MP pixel resolution? Ouch, that’s when things get even more painful and confusing, as it is hard for someone to wrap their brain around the concept that a smaller sensor can produce images as big as a large sensor camera. And this is where we get into another can of worms, pixel density.
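For those who like to see the arithmetic spelled out, here is a minimal Python sketch of the print size math described above. It assumes the same 1:1 pixel-to-dot mapping, and the function name `max_print_size_inches` is purely illustrative. The pixel dimensions are the ones quoted in this section.

```python
def max_print_size_inches(width_px, height_px, dpi=300):
    """Largest print (width, height) in inches, assuming one image pixel per printed dot."""
    return width_px / dpi, height_px / dpi


# Pixel dimensions quoted in the article.
cameras = {
    "Nikon D800/D810": (7360, 4912),
    "Nikon D4s": (4928, 3280),
}

for name, (w, h) in cameras.items():
    for dpi in (300, 240, 150):
        pw, ph = max_print_size_inches(w, h, dpi)
        print(f"{name} at {dpi} dpi: {pw:.2f} x {ph:.2f} in")
```

Note that sensor size never enters the calculation: only pixel counts and the target dpi do, which is exactly why two cameras with the same pixel resolution come out with the same "maximum" print size.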

9.3) Pixel Density

So, we ended the last section with how a print from two different size sensors could yield the same size, as long as their pixel resolution was the same. Well, this is where it all comes together…hopefully. After manufacturers started making smaller sensors (initially for cost reasons as explained in the beginning of this article), they started to realize that there were other benefits to smaller sensors and formats that they could capitalize on. Well, it was basically the same story as Large Format vs Medium Format, or Medium Format vs 35mm Film – the larger you go, the more expensive it gets to manufacture gear. There was a reason why 35mm became a “standard” in the film industry, as not many were willing to spend the money to go Medium Format or larger due to development and print costs, gear, etc. So when APS-C became a widespread format, a number of manufacturers jumped on the mirrorless bandwagon and started to market the idea of going light, versus the big and bulky DSLRs. Within a few years, this “go light” became a trend, almost a movement. Companies like Fuji and Sony even started their anti-DSLR campaigns, trying to educate people not to buy DSLRs and buy smaller and lighter mirrorless cameras instead. It made sense and the campaign is slowly gaining traction, with more and more people switching to mirrorless.

Well, manufacturers realized that if they used the same pixel density on sensors (i.e. how many pixels there are per square inch of sensor surface) it would make the small sensor cameras look inferior, since their sensor surface area is obviously noticeably smaller. So they started pushing more and more resolution on smaller sensors by decreasing pixel pitch (making the pixels smaller and packing them more tightly), which made these smaller sensors appear “equivalent” (by now I hate this term!) to bigger formats. Same old Megapixel wars, except now we are confusing people with specifications that seem to be awfully similar: a $6500 Nikon D4s DSLR camera with 16 MP that is big, heavy and bulky vs a $1700 Fuji X-T1 mirrorless camera with the same 16 MP resolution. Or a camera phone with 41 megapixels on a tiny sensor…

However, despite what may sound like a bad idea, there was actually one big advantage to doing this – at relatively low sensitivities, smaller pixels did not suffer badly in terms of noise and manufacturers were able to find ways to “massage” high ISO images by applying various noise suppression algorithms that made these sensors look quite impressive. So more focus was put on making smaller sensors more efficient than their larger counterparts.

As I have explained in my “the benefits of high resolution sensors” article, cramming more pixels tighter together might sound like a bad idea when you are looking at the image at pixel level, but once you compare the output to a smaller sized print from the same sized sensor camera with fewer pixels, the down-sampled / resized / normalized image will contain roughly the same amount of noise and its overall image quality will look similar. The biggest advantage in such a situation is the pixel-level efficiency of the sensor at low ISOs. If a 36 MP camera can produce stunning-looking images at ISO 100 (and it does), people that shoot at low ISOs would get the benefit of larger prints, while those that shoot at high ISOs are not losing a lot in terms of image quality once they resize the image to lower resolution. In a way, it became a win-win situation.

What this means, is that when we deal with modern small sensor cameras, despite having a smaller physical sensor surface area, the large number of smaller pixels essentially “enlarges” images. Yes, once “normalized” to the same print size, smaller sensors will show more noise than their full-frame counterparts, but due to better sensor efficiency and more aggressive noise suppression algorithms, they look quite decent and more than “acceptable” for many photographers.

So if cramming more pixels together has its benefits, why not cram more? Well, that’s essentially what we are seeing with smaller sensor formats – they are cramming more pixels into their sensors. APS-C quickly went from 12 MP to 16 MP, then from 16 MP to 24 MP within the past 2 years and if we look at the pixel efficiency of the Nikon CX and Micro Four Thirds systems, DX could be pushing beyond 24 MP fairly soon (Samsung NX1 is already at 28 MP, thanks to EnPassant for reminding). With such small pixel size, we might be pushing beyond 50 MP on full-frame sensors soon too, so it is all a matter of time.
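To put rough numbers on the pixel density discussion above, here is a small Python sketch that estimates pixel pitch (the center-to-center distance between neighboring pixels) for a 16 MP full-frame sensor and a 16 MP APS-C sensor. The sensor widths (36.0mm and 23.6mm) are nominal values I am assuming for illustration, and both sensors are assumed to be 4,928 pixels wide, so treat the output as approximate.

```python
def pixel_pitch_um(sensor_width_mm, width_px):
    """Approximate pixel pitch in micrometers: sensor width divided by horizontal pixel count."""
    return sensor_width_mm / width_px * 1000.0


# Assumed nominal sensor widths; both sensors taken as 4928 pixels wide (about 16 MP).
full_frame_pitch = pixel_pitch_um(36.0, 4928)
aps_c_pitch = pixel_pitch_um(23.6, 4928)

print(f"Full frame 16 MP: {full_frame_pitch:.2f} um per pixel")
print(f"APS-C 16 MP:      {aps_c_pitch:.2f} um per pixel")
print(f"Pitch ratio:      {full_frame_pitch / aps_c_pitch:.2f}x")
```

The pitch ratio comes out to roughly the crop factor, which is another way of saying that the smaller sensor reaches the same pixel count only by using smaller, more tightly packed pixels.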

9.4) Pixel Density, Sensor Size and Diffraction

Now here is an interesting twist to this what-seems-like-a-never-ending debate: since smaller sensors are essentially “magnified” with smaller pixels, that same circular form in the shape of circle of confusion is also…magnified. So this gave photography geeks yet another variable to add to the sensor “equivalence” – circle of confusion variance. Yikes! People even made up something called a “Zeiss formula” (which as it turns out actually has nothing to do with Zeiss), that allows one to calculate the size of circle of confusion based on the physical sensor size. This has become so common, that such calculations have now been integrated into most depth of field calculators. So if you find yourself using one, look for “circle of confusion” and you will probably find that size for the format you selected. Given that all small sensors do pack more pixels per inch, it is actually safe to assume that the circle of confusion will be smaller for smaller systems, but the actual number might vary, since the calculation is still debated on what it is supposed to be. Plus, “magnification” is relative to the pixel size today – if in a few years we will be using pixels half the size on all sensors, those numbers will have to be revised and the formulas will have to be rewritten…

Now in regards to diffraction, since diffraction is directly tied to the circle of confusion, if the latter is more “magnified”, then it is also safe to assume that smaller sensors exhibit diffraction at larger apertures. That’s why when you shoot with small format camera systems like Nikon CX, you might start seeing the effect of diffraction at f/5.6, rather than f/8 and above in camera systems with larger sensors.
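As a rough illustration of how depth of field calculators typically derive a circle of confusion from sensor size, here is a Python sketch that divides the sensor diagonal by a fixed number. The divisor 1730 is the figure usually quoted for the so-called “Zeiss formula”, while 1500 is another common convention; as noted above, neither is settled. The sensor dimensions are nominal values I am assuming, and the function name is illustrative only.

```python
import math

# Assumed nominal sensor dimensions in millimeters (width, height).
SENSORS_MM = {
    "Full frame (FX)": (36.0, 24.0),
    "APS-C (Nikon DX)": (23.6, 15.6),
    "Micro Four Thirds": (17.3, 13.0),
    "Nikon CX": (13.2, 8.8),
}


def circle_of_confusion_mm(width_mm, height_mm, divisor=1500):
    """Circle of confusion as sensor diagonal / divisor (a convention, not a physical law)."""
    return math.hypot(width_mm, height_mm) / divisor


for name, (w, h) in SENSORS_MM.items():
    coc_1500 = circle_of_confusion_mm(w, h, 1500)
    coc_1730 = circle_of_confusion_mm(w, h, 1730)
    print(f"{name}: {coc_1500:.3f} mm (d/1500), {coc_1730:.3f} mm (d/1730)")
```

Whichever divisor you pick, the value shrinks in step with the sensor diagonal, which is the "magnification" effect described above. The absolute numbers depend entirely on the convention chosen.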

9.5) High Speed APS-C Cameras

Once photographers started realizing these benefits, the field of view equivalency that we’ve talked about earlier started literally translating to the ability to magnify the subject more and potentially resolve more details. Since the barrier for entry into full-frame is still at around $1500, the introduction of high-resolution APS-C sensor cameras like the Canon 7D Mark II was greeted with a lot of fanfare, sparking heated discussions on advantages and disadvantages of high-speed APS-C DSLR cameras vs full-frame (in fact, many Nikon shooters are still waiting for a direct competitor to the 7D Mark II for this reason). Sadly though, just like the topic of equivalence, these discussions on APS-C vs full-frame lead nowhere, as both parties will happily defend their choices till their death.

You have probably heard someone say that they prefer shooting with cropped sensor cameras due to their “reach” before. The argument that is presented does make sense – a sensor with a smaller pixel pitch (or more pixels per inch) results in more resolution and therefore translates to more details in an image (provided that the lens used is of high quality, capable of resolving those details). And higher resolution obviously translates to bigger prints, since digital images are printed at dots per inch – the more the dots, the larger the prints, as I have already explained before. Lastly, higher resolution also allows for more aggressive cropping, which is something that wildlife photographers always need.

If you are interested in finding out what I personally think about all this, here is my take: there are three factors to consider in this particular situation: Cost, Pixel Density and Speed. Take the D800/D810 cameras – 36 MP sensors with similar pixel density as 16 MP APS-C/DX cameras. If the D800/810 cameras offered the same speed as a high-end DX camera (say 8 fps or more) and cost the same as a high-end DX, the high-end DX market would be dead, no argument about it. And if you are to state that the newest generation 24 MP DX cameras have a higher pixel density, well, the moment manufacturers release an FX camera with the same pixel density (50+ MP), that argument will be dead again. Keep in mind, that at the time Nikon produced the D800, it had the same pixel density as the then current Nikon D7000 – so taking a 1.5x crop from the D800 produced a 16 MP image. One could state that the D800 was a D7000 + D800 in one camera body in terms of sensor technology and they would be right. But not in terms of speed – 6 fps vs 4 fps does make a difference for capturing fast action. If Nikon could make a 50+ MP full-frame camera that shoots 10 fps and costs $1800, high-end DX would make no sense whatsoever. But we know that such a camera would be impossible to produce with the current technology, which is why high-end DX is still desired. Now let’s look at the cost reason more closely. Not everyone is willing to drop $7K on a Nikon D4 or a Canon 1D X. But what if a full-frame camera with the same speed as the D4 was sold at $1800? Yup, high-end DX would again be dead. Why do people still want a high-end DX today? Well, looking at the above arguments, it is mostly about cost. All other arguments are secondary.
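The pixel density comparison above boils down to the square of the crop factor. Here is a short Python sketch of that relationship, assuming a 1.5x crop factor for DX; the function names are hypothetical and the results are approximate, since real cameras round their crop modes to convenient pixel dimensions.

```python
def cropped_megapixels(full_frame_mp, crop_factor=1.5):
    """Megapixels left after cropping a full-frame image by the given crop factor."""
    return full_frame_mp / crop_factor ** 2


def full_frame_mp_for_same_density(crop_sensor_mp, crop_factor=1.5):
    """Full-frame megapixels needed to match the pixel density of a crop sensor."""
    return crop_sensor_mp * crop_factor ** 2


d800_mp = 7360 * 4912 / 1e6  # about 36 MP, from the D800/D810 file dimensions

print(f"D800 cropped 1.5x: {cropped_megapixels(d800_mp):.1f} MP")
print(f"FX needed to match a 24 MP DX sensor: {full_frame_mp_for_same_density(24):.0f} MP")
```

This is where the "50+ MP" figure comes from: a 24 MP DX sensor stretched to full-frame area at the same pixel density lands at about 54 MP.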

10) Equivalence is Absurd: CX vs DX vs FX vs MF vs LF

I bet by now you are wondering why in the world you even started to read this article. I don’t blame you, that’s how I felt, except my thought was “why am I even writing this article?” To be honest, I really thought about not publishing it for a while. But after seeing comments and questions come up more and more from our readers, I thought it would be good to put all of my thoughts on this matter in one article. In all honesty, I personally consider the subject of equivalence as absurd as it is confusing. Why are we still talking about equivalent focal lengths, apertures, depth of field, background blur and all other mumbo jumbo, when the whole concept of “equivalence” was originally created for 35mm film shooters as a reference anyway? Who cares that 35mm film was popular – why are we still using it as the “bible” of standards? When Medium Format moves into the “affordable” range (which is already kind of happening with MF CMOS sensors and Pentax 645Z), are we going to go backwards in equivalence? By then, we might start seeing Large Format digital!

Let’s get the last fact right: at the end of the day, it all boils down to what works for you. If you only care about image quality, larger will always be better. It will come with weight and bulk, but it will give you the largest prints, the best image quality, paper-thin DoF, beautiful subject isolation, etc. But if your back cannot take it anymore and you want to go lightweight and compact, smaller systems are getting to the point where they are good enough for probably 90% of photographers out there. And if you want to go really small, just take a look at Thomas Stirr’s work on Nikon CX – perhaps it will make you reconsider what your next travel camera should be.

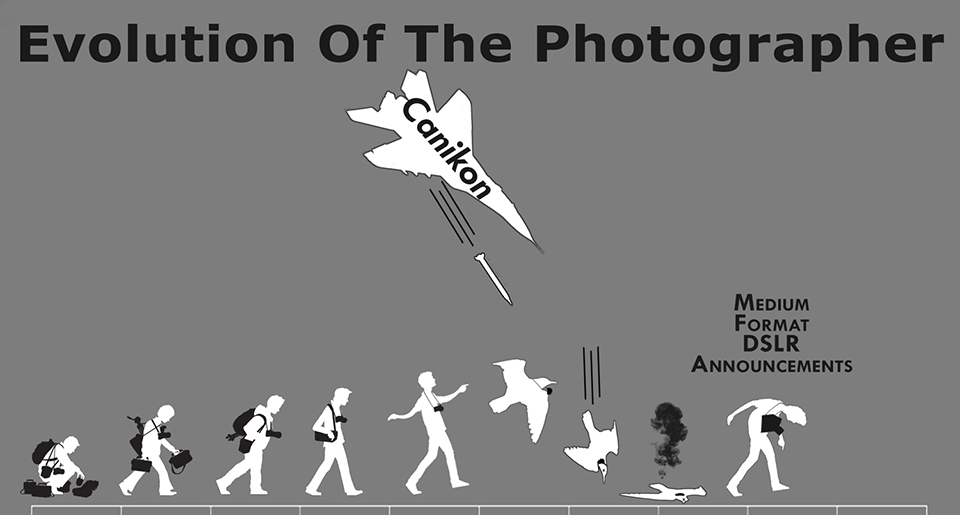

I love how our Bob Vishneski modified Fuji’s “Evolution of the Photographer“, where Fuji wanted to show how nice it is to go lightweight with Fuji’s mirrorless system. Take a look at his version, it will crack you up (sorry Bob, I just could not resist!):

And I loved this quote by our reader Betty, who summed up a lot of what I have said in this article: “As soon as you start using different cameras (!), with different processing engines (!), different sensors (!) and different pixel densities (!), and then start zooming a lens (!) to achieve or compensate for different crops, all bets are off. Your ‘results’ are meaningless”. What a great way to describe what a lot of us are sadly doing.

11) Summary: Everyone is Right, Everyone is Wrong

In all seriousness, let’s just drop this equivalence silliness. It is too confusing, overly technical and unnecessarily overrated. Keep in mind, that as the mirrorless format takes off, we will have a lot more people moving up from point and shoot / phone cameras. They don’t need to know all this junk – their time is better spent learning how to use the tools they already have.

Just get over this stupid debate. Everyone is right, everyone is wrong. Time to move on and take some great pictures!

“Therefore, it is the shorter focal length, coupled with the smaller aperture diameter that make things appear less aesthetically pleasing on smaller format systems.”

Whether greater DOF is “less pleasing” is entirely dependent on the subject and the photographer’s vision. Shallow DOF is not appropriate nor ideal for every subject.

A 3mp Samsung compact joke of a camera produced a huge 4ft x 3ft image that has graced my wall for decades and produced admiring comments, but it’s NOT sharp, DOESN’T have a high resolution, but is MASSIVELY atmospheric. If the image truly touches you, then it works.

Not confusing (for me)

I even want to write about subjects that go deeper into the conceptual side of things.

This is also not as absurd of a topic as the writer wants to suggest.

I talked recently with a young man who will be starting to work as a dentist and wants to also take macro shots of teeth and related items, for educational purposes.

He is someone who has used a camera before, who can understand math, and who asked me for advice on what to buy for his work.

I found myself talking about depth of field (which we want deeper, in this case) and how that relates to cropping / crop-sized sensors, pixel density and all the concepts that many photographers are afraid of perusing.

Anyone who is thinking of investing in a camera system wants their money to go as far as possible (in the direction in which they intend to use the gear)

We need expertise to dispel marketing jargon and educate users to make better buying decisions.

I think that is the phase where we need this the most: before buying.

If you already own equipment, then use it and try to maximise its potential for the kind of work you are doing.

Before buying, you need to understand what a 1:1 magnification ratio means on different sensor sizes.

Yes, it means the same thing regardless of sensor size; a millimeter in real life will be projected onto a millimeter on the sensor, but an object that takes up 40% of the width of the sensor (and therefore the frame) when using a full-frame camera, will take up about 80% of the frame when using a micro-four-thirds camera and a lens with the same magnification.

Somebody needs to explain this to those starting out and that is why we, nerdy minds, are here.

See Everything You Need to Know About Macro Photography by Spencer Cox, particularly the section What Is Magnification?.

photographylife.com/macro…y-tutorial

Cool! Thank you.

I will read that as well.

Thank you for your elaborate article. The sensor size APS-C was based on the new film size APS in the late 80’s – early 90’s. Even Leica made a nice and tiny camera for it. For a short while it was seen as the successor of 35mm, as it was more versatile. One could choose different formats, for instance, and it had a very nice option for panoramic pictures.

Alas, it was short lived.

And, don’t forget that MFT is practically the equivalent of 110 film cassettes.

Most of the lenses used today are based on age-old film formats.

Some things just never fade away.

Not confused at all, thank you for taking the time to write this all down. Excellent.

I’ve used 35mm film in the past (Pentax user), Nikon DX (latest the D500), Nikon 1 (J2/J5), MFT (Olympus OM-D E-M1 Mark II) and each has its benefits. I was really sad Nikon dropped the 1 range, it deserved better, they are great cameras. One day I may move to a larger format sensor, who knows. And who knows, I may even invest in a second hand Nikon film camera and use some of the lenses I have that can be used on it to get that “full frame” experience.

Nasim, I always appreciate your sense of humor. It balances your frustration with this crunchy topic while still clearly communicating your love of photography, which is what makes even a dense article a delight for readers. Thank you!

Before I read this article, I was confused. Now, I’m confused and my head hurts. Thank you?

Seriously, though, thank you for an in-depth article that has convinced me to stop thinking about equivalence. Even if I could determine it exactly, it’s not worth the effort.

love to learn more topics.

This is by far one of the most comprehensive and well articulated articles for a beginning photographer looking to learn their equipment, that I’ve ever read. There is such a natural flow to the way you lay out each point and then connect them together and subsequently differentiate them. For someone new to photography, I’d like to tell you why I’m so appreciative of the research and effort that went into this body of work.

When you are “new to the game” or what some people call a Johnny-come-lately LOL, a lot of these terms or perhaps even none of these terms make a ton of sense isolated or in relation to one another. This article did a phenomenal job at helping me understand what it is that I have, how to best utilize it, how to understand what it is that I’m looking at when I’m purchasing items, and what not to get hung up on so that I can just get out there and shoot!

It’s been a while since I’ve felt like a forum earned my subscription to their content, whatever it may be so bravo to you, well done!

And as I’m writing this I’m just realizing that you’re located in Denver, Colorado where I am! That’s awesome! I’ll have to see if you have any upcoming classes! Can’t wait to take a look around the site!

This was just what I needed, Thanks Nasim!

Sensors should be interchangeable like old film rolls. We should be able to try the sensor we want. Whichever sensor we like, we get its top models so

It is almost 2019 now and I have these thoughts:

1. Nobody prints anymore

2. Video is more engaging

3. Any camera even a cell phone camera can take 8 or more megapixels

Because of those factors, I don’t think advances in megapixels matters much.

Also, with 4K video, technically you can grab an 8MP frame anytime you want.

With Olympus cameras having sensor shift to create a multiple shot merged higher megapixel image, you don’t even need a better sensor.

You can even do that with a much lower resolution sensor: stack and merge them in Photoshop for less noise, higher detail and potentially use 4K frame grabs, too.

The argument against this is that there will be movement in the frames, but you can counter it with shots taken at maximal fps.

Besides, most high resolution images are mostly static, either landscape or for art/advertisement.

We are in the golden age of photography (because equipment is so good) yet nobody knows it.

All that being said, I have an A7r MkI with awesome resolution, but also have a GX85 with 14-140 lens for 4k video and stacked 16MP images that comes close. And I print 30×40, too.