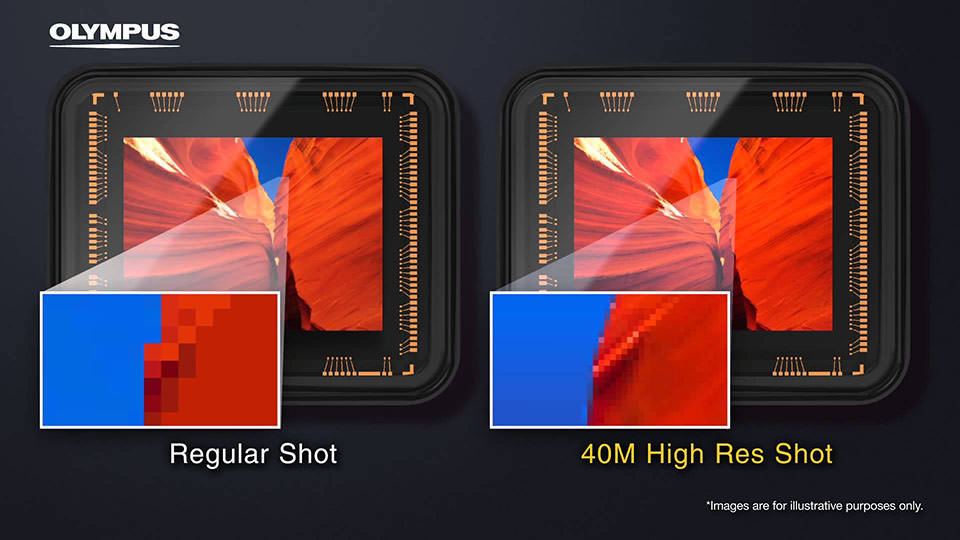

Olympus definitely deserves high praise for the way it uses the in-body image stabilization (IBIS) system of its OM-D E-M5 II mirrorless camera: the camera shifts its sensor to capture multiple images, then merges them into one super high-resolution image. Thanks to this technology, the OM-D E-M5 II, which has a native resolution of 16 MP, can shoot large 40 MP images. At first, this may sound like a marketing gimmick, but if you take a close look at how Olympus accomplishes it, you will be amazed by the technology. Being able to shift the sensor opens up a lot of opportunities, and if DSLR manufacturers implement this technology (which Pentax already has, with its K-3 II) and find ways to do it quickly and smoothly, it could seriously change the way we look at resolution. Let’s take a look at this technology in a little more detail and see its advantages and disadvantages.

Olympus implemented its high resolution mode by shifting the sensor by half a pixel in a clockwise direction and capturing a total of eight 16 MP images, which the camera takes automatically in sequence using its electronic shutter (you don’t hear any shutter actuations in between). Those eight images are then merged into a single 40 MP image. Very clever and cool technology for sure!
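The basic reason a half-pixel shift adds information can be illustrated in one dimension. The sketch below is a simplified model, not Olympus's actual pipeline: it assumes a monochrome sensor, point sampling, two exposures offset by exactly half a pixel, and a hypothetical `scene` function standing in for the light falling on the sensor. Interleaving the two frames gives twice as many samples per axis, exactly as if the scene had been sampled on a grid twice as fine.

```python
import math

def scene(x):
    """Hypothetical 1D scene: detail finer than a one-pixel grid can resolve."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * x / 1.5)

def capture(n_pixels, offset):
    """Sample the scene at each pixel centre, with the sensor shifted by `offset` pixels.

    Point sampling is a simplification; real pixels integrate light over their area.
    """
    return [scene(i + offset) for i in range(n_pixels)]

def interleave(frame_a, frame_b):
    """Merge a frame and its half-pixel-shifted twin into one grid with 2x the samples."""
    merged = []
    for a, b in zip(frame_a, frame_b):
        merged.extend([a, b])
    return merged

base = capture(8, 0.0)      # first exposure
shifted = capture(8, 0.5)   # second exposure, sensor moved half a pixel
hi_res = interleave(base, shifted)
print(len(base), len(hi_res))  # 8 16
```

In two dimensions the same idea needs shifts along both axes: four half-pixel positions give 2x the samples per axis, or 4x in total. How the camera gets from its eight exposures to a 40 MP file involves further processing that this sketch does not attempt to model.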


High Resolution Mode: More Details

Although this technique requires a tripod and a scene without much movement (otherwise moving parts might not merge properly), there are some serious advantages to this high resolution mode, or sensor shift. First, you certainly do get more overall resolution and detail. Take a look at the image below:

Let’s now take a center crop from the image:

And here is the crop from the same area of the 40 MP image:

Obviously, there is a pretty big difference between the two. At first glance, though, the high resolution crop seems to show less detail than the normal one. So the big question is: does it actually contain more detail? What if we took the first crop and up-sampled (resized) it to match the 40 MP resolution? Let’s take a look:

Whoops, the up-sampled image looks pretty bad in comparison: everything looks bigger, and there are no fine details. So the high resolution mode is in fact giving us a lot more detail!
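Why up-sampling cannot stand in for real samples is easy to see in one dimension. In this sketch (an idealized model with point sampling and linear interpolation at an integer factor, chosen for illustration rather than taken from any particular editor's resize algorithm), a fine alternating pattern is sampled at every other position and then resized back to full length. The result has the right number of pixels but none of the original detail.

```python
def linear_upsample(samples, factor):
    """Resize a 1D signal by an integer factor using linear interpolation."""
    out = []
    for i in range(len(samples) - 1):
        a, b = samples[i], samples[i + 1]
        for k in range(factor):
            t = k / factor
            out.append(a * (1 - t) + b * t)
    out.append(samples[-1])
    return out

# Fine detail: a pattern that alternates every pixel (think thin lines on a fence).
fine = [i % 2 for i in range(17)]   # 0, 1, 0, 1, ...

# A coarser sensor samples only every second position and misses the alternation.
coarse = fine[::2]                  # all zeros

restored = linear_upsample(coarse, 2)
print(len(restored) == len(fine))   # True: same pixel count
print(max(restored))                # 0.0: the fine lines are gone
```

Interpolation can only blend the samples it is given, which is why the up-sampled crop gains size but not detail.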

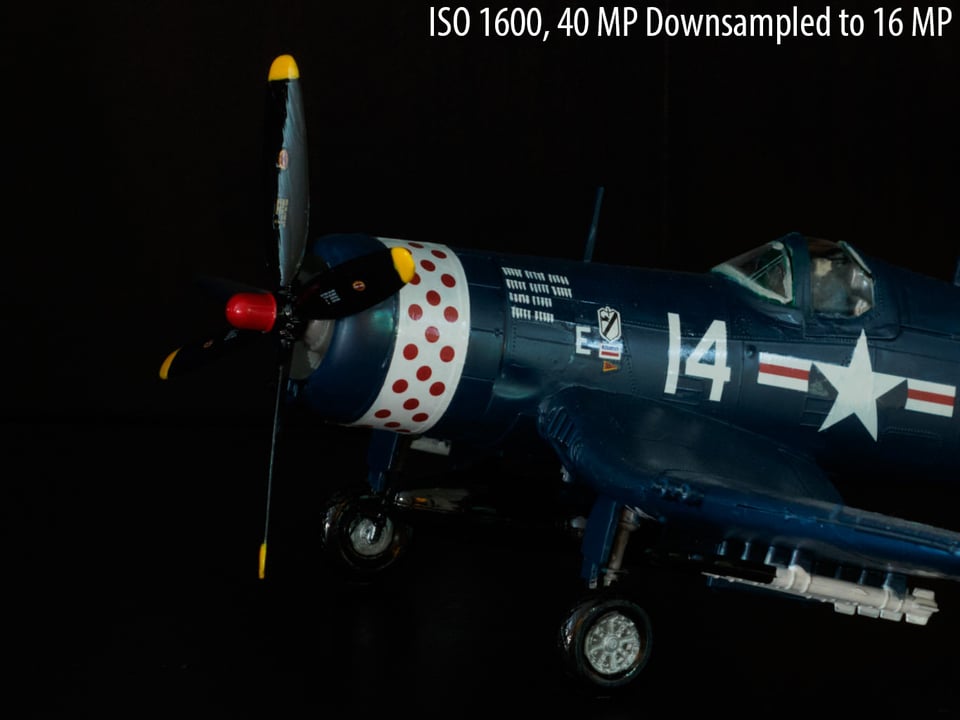

Now, what if we go the other way and down-sample the high resolution image to 16 MP? Let’s take a look at the details:

If you look really closely and compare it to the first crop, you will see more detail. The difference is not that huge, though, since the camera already does a decent job of resolving plenty of detail at its native resolution. However, down-sampling does have one huge advantage: it reduces noise.

Down-sampling High Resolution Images: Noise

What does a 40 MP image shot at ISO 1600 look like when it is down-sampled to 16 MP and compared to a native 16 MP file? Let’s take a look (I intentionally made the image darker to show noise in the shadows):

When you open the above images at 100% on your computer, you will see a pretty drastic difference in the way noise appears. At its native resolution of 16 MP, the camera shows clear signs of noise all over the image, especially in the shadows. The second image, captured at 40 MP and down-sampled to 16 MP, is a different story: noise is practically absent everywhere, leaving a very clean image. I cannot accurately estimate the difference between the two images in stops, but the down-sampled image at ISO 1600 looks really good, at least as good as ISO 800.
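A rough way to reason about the noise gain: if down-sampling averages N input pixels into each output pixel and the noise in those pixels is uncorrelated, the noise standard deviation drops by a factor of √N, which is ½·log₂(N) stops. The sketch below applies that idealized model to 40 MP → 16 MP and checks the √N rule with a quick simulation. It deliberately ignores the fact that the high resolution mode combines eight separate exposures, so it is a lower-bound style estimate under stated assumptions, not a prediction of the result above.

```python
import math
import random
import statistics

def downsample_noise_gain_stops(src_mp, dst_mp):
    """Idealized noise gain, in stops, from averaging src_mp/dst_mp pixels per output pixel.

    Assumes uncorrelated noise and a plain averaging filter; real resamplers differ.
    """
    n = src_mp / dst_mp
    return 0.5 * math.log2(n)

def block_average(values, block):
    """Average consecutive, non-overlapping blocks of `block` samples."""
    return [sum(values[i:i + block]) / block
            for i in range(0, len(values) - block + 1, block)]

print(round(downsample_noise_gain_stops(40, 16), 2))  # 0.66

# Simulated flat grey patch with unit-sigma Gaussian noise.
random.seed(0)
noisy = [random.gauss(0.0, 1.0) for _ in range(100_000)]
averaged = block_average(noisy, 4)   # 4 pixels -> 1: about half the sigma, one stop
print(statistics.pstdev(noisy), statistics.pstdev(averaged))
```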

Another great advantage of this mode is that you are not limited to stitched JPEG images: the camera can capture scenes in high resolution mode in RAW format, so you still have full flexibility when working with those files.

Unfortunately, the high resolution mode on the OM-D E-M5 II has a limitation: you can only go as high as ISO 1600, most likely because noise patterns could affect the images and cause merging issues at higher ISOs. Still, this is really impressive!

Pitfalls

As I have already mentioned, using the sensor shift mode requires some discipline, and some of its requirements can be seen as pitfalls. First of all, you need a tripod, and your setup has to be very stable. If there is any potential for camera shake, the images will not merge properly. Take a look at the image below, which I attempted to capture hand-held in high-res mode:

Clearly, that did not work. Another issue is movement in the scene while the images are being captured: if you have moving trees, bushes, water, etc., the final image might not look right due to stitching errors.
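Why movement breaks the merge can be shown with two frames offset by half a pixel in one dimension. In this sketch (a simplified model; the `edge` scene and the size of the move are illustrative assumptions), a sharp edge merges cleanly when nothing moves. If the edge shifts between the two exposures, the interleaved samples no longer line up and the merged edge develops a bright/dark zig-zag, a 1D version of the artifacts in a failed high-res shot.

```python
def edge(x, position):
    """Hypothetical scene: dark (0) left of `position`, bright (1) to the right."""
    return 1.0 if x >= position else 0.0

def merge_two_frames(n_pixels, edge_first, edge_second):
    """Interleave a frame at offset 0 with a frame at offset 0.5 pixel.

    `edge_second` lets the scene (or the camera) move between the two exposures.
    """
    merged = []
    for i in range(n_pixels):
        merged.append(edge(i, edge_first))
        merged.append(edge(i + 0.5, edge_second))
    return merged

def is_monotonic(values):
    """True if the profile never drops, as a clean dark-to-bright edge should."""
    return all(a <= b for a, b in zip(values, values[1:]))

static = merge_two_frames(10, 4.2, 4.2)   # nothing moved
moved = merge_two_frames(10, 4.2, 5.7)    # edge moved 1.5 pixels between shots
print(is_monotonic(static), is_monotonic(moved))  # True False
```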

Conclusion

As you can see from the above study, the new high resolution mode using sensor shift technology has huge potential. Not only does it deliver more detail in a scene, but it can also potentially reduce noise at higher ISOs, thanks to the advantages of down-sampling. In addition, you can control how much detail you want in your final images by applying some smart down-sampling and sharpening techniques.

Now think about what this would mean if Nikon implemented this on the D810 – suddenly 36 MP would look puny in comparison :)

There’s been a software program for several years now that allows you to do this with ANY camera. Yes Nasim, even the D810. ;-) What’s interesting about it is that it CAN’T be used on a tripod: you need to hand-hold your shots to create slight shifts between the images, the exact opposite of in-camera processes like this one. The program is called PhotoAcute Studio; you can get more info on it here:

photoacute.com/studio/

The problem with that is that your hand shakes randomly, and the software doesn’t know how much your camera moved between shots.

It doesn’t matter. The data is averaged out. You can download the software and use it. I forget whether it has a trial or simply watermarks the results, but either way you can see the results for yourself.

Unfortunately, the software you cited is no longer supported by PhotoAcute.

Hmmm, interesting, where did you get that info from? The software as-is does work however.

Good to see other companies joining Hasselblad in this arena. I’ve found that even without this technology, you can get very good results using this technique: petapixel.com/2015/…photoshop/

I’ve tried a few shots like this, and get about 3-5x the resolution of my sensor and 2-4 stops less noise. Perfect for when you don’t have a tripod with you, but still want detail in a still subject.

This technology is indeed interesting, yet it feels like compensation for the lack of a high-resolution or large sensor.

A typical use case would be landscape, but what’s the ideal landscape camera? Either a very high res FF or a larger (6×7 / medium format) sensor or film.

I am not aware of direct comparisons between a 36/50 MP (FF) image and this composite, but intuitively the single, high res sensor will outperform this solution.

Of course it is still a nice achievement from Olympus and allows its dedicated users to do what they could not before.

Intuitively, it seems to me the Olympus technology will win. Bear in mind they’re actually sampling a hell of a lot more than 40 MP to generate their 40 MP, including sampling multiple colors at a given position, so they can read multiple colors for many pixels. (They take shots at 1/3-pixel increments, which means they have information for one color at the extreme pixels, two colors for some pixels, and all three colors for others.) Assuming this works perfectly, this is actually better than 144 MP with a typical Bayer mosaic. Of course, it isn’t perfect in practice (camera shake, imprecision in sampling and sensor movement), and the lens limits the effectiveness.

This doesn’t mean you get much higher resolution, since the final image is rendered using interpolation, which inherently involves a low-pass filter. Additionally, it strongly depends on the f-stop. The theory behind this can be found in various publications (some of them 30 years old) and investigations; here are two examples:

philservice.typepad.com/Limit…_Shift.pdf

www.spiedigitallibrary.org/confe…hort?SSO=1

It really depends on what you mean by “much”. I believe the OM-D E-M5 II is sampling 9x and then synthesizing 2.5x the pixels, or roughly 1.6x the linear resolution. Now, if your argument is that it won’t be as good as actually having 1.6x the linear resolution, you may be right, but you may also be very wrong, because a normal Bayer-filtered photograph is already cheating to get its stated resolution. By oversampling the way they are, Olympus is not only attempting to improve linear resolution; they are also able to compensate for some of the cheating in the original stated resolution.

In simple terms, a camera takes P x D (pixels x depth) bits of data and outputs P x D x 3 bits of data “interpolated” from them. Olympus is taking P x D x 9 x 3 bits of data and interpolating it down to P x D x 2.5 x 3 bits of data. We can get into long arguments about the details, but if they simply interpolated 3 frames offset by one whole sensel (thus properly sampling the color at every pixel), they could seriously outdo the quality of data from a standard sensor (or a Foveon), and they’re doing a lot more than that.
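The pixel arithmetic in this exchange is easy to check. Pixel count scales with the square of linear resolution, so 2.5 times the pixels is only about 1.58 times the detail along each axis. Likewise, nine sensor positions of a 16 MP sensor add up to 144 million raw samples, the 144 MP figure in the earlier comment. The nine-position (1/3-pixel) scheme is the commenters' assumption; the article itself describes eight frames at half-pixel steps, so both counts are shown.

```python
import math

def linear_gain(native_mp, output_mp):
    """Increase in resolution along one axis when pixel count grows from native to output."""
    return math.sqrt(output_mp / native_mp)

def total_samples_mp(native_mp, positions):
    """Raw samples collected when the sensor is read out at `positions` different offsets."""
    return native_mp * positions

print(round(linear_gain(16, 40), 2))   # 1.58
print(total_samples_mp(16, 9))          # 144 (commenters' 3x3 grid of 1/3-pixel steps)
print(total_samples_mp(16, 8))          # 128 (eight frames, as described in the article)
```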

Wouldn’t this be more useful for getting rid of Bayer interpolation and capturing the exact color on all 3 channels for each pixel? That would create the same size (16 MP) image, but with a lot more detail! (You can see this on Sigma cameras with their Foveon sensor; it’s amazing.)

Correct me if I’m wrong, but I think a real 40 MP sensor should produce a sharper image than this technique…

You’re right; Hasselblad has already been doing exactly that for some time now: www.imaging-resource.com/news/…0c-multi-s

“By merging multiple frames, the H5D-200c can capture four-shot, 50-megapixel stills with full color at every image location.”
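The “full color at every image location” idea can be checked against a Bayer pattern. This sketch assumes an RGGB layout and four one-pixel sensor positions; the exact shift sequence any particular camera uses is an assumption here. Every scene location ends up seen once through a red filter, twice through green and once through blue, so no color has to be interpolated from neighbouring pixels.

```python
from collections import Counter

def bayer_filter(row, col):
    """Color filter over the photosite at (row, col) for an RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

# Four sensor positions, each a whole-pixel step (row offset, column offset).
# The sign of the shift does not matter: the pattern repeats every two pixels.
SHIFTS = [(0, 0), (0, 1), (1, 1), (1, 0)]

def colors_seen(row, col):
    """Filters that cover scene location (row, col) across the four exposures."""
    return Counter(bayer_filter(row + dr, col + dc) for dr, dc in SHIFTS)

for r in range(2):
    for c in range(2):
        print((r, c), dict(colors_seen(r, c)))
```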

Sigma with Foveon does it as well. But there are always trade-offs; in Foveon’s case, it is the high-ISO problems. But a 36+ MP Bayer sensor still out-resolves a 15/16 MP setup that records three colors per pixel.

I’d say limited potential. It’s the same as with HDR or CMOS electronic shutters, where you have to be wary of movement of both the camera and the subject. For very specific situations it’s very useful, but in general it’s not very practical.

This makes me think. I have a D810, which works darned well at higher ISOs. However, if the subject is dark, there is a limit to how well that works.

What if I zoom in (say to 70 mm or 200 mm) and take a number of images to make a very high-res panorama of what I could have taken in one shot at, say, 24 mm? Would down-rezzing that panorama to the 36 MP image I would have gotten at 24 mm produce a low-noise image?
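A back-of-the-envelope version of this idea, assuming a 36 MP full-frame body, frames of equal exposure, uncorrelated noise, and a simple linear model of field of view that ignores lens projection: the mosaic's pixel count grows with the square of the focal-length ratio, and down-sampling it back to 36 MP averages that many pixels into each output pixel. The 30% overlap default is an illustrative assumption, not a rule.

```python
import math

def mosaic_plan(f_wide_mm, f_tele_mm, sensor_mp, overlap=0.3):
    """Rough plan for replacing one wide shot with a stitched telephoto mosaic.

    Uses a linear field-of-view approximation (coverage ~ 1 / focal length) and
    assumes uncorrelated noise, so treat the results as estimates only.
    """
    ratio = f_tele_mm / f_wide_mm                 # linear magnification per axis
    step = 1 - overlap                            # fraction of each new frame that is new
    frames_per_axis = math.ceil(1 + (ratio - 1) / step)
    mosaic_mp = sensor_mp * ratio ** 2            # pixels covering the wide field of view
    noise_gain_stops = math.log2(ratio)           # 0.5 * log2(ratio**2) when down-sampling
    return frames_per_axis, mosaic_mp, noise_gain_stops

frames, mp, stops = mosaic_plan(24, 70, 36)
print(frames, round(mp), round(stops, 1))  # 4 306 1.5
```

Under those assumptions a 70 mm mosaic needs about a 4 × 4 grid, covers the 24 mm view with roughly 306 MP, and gains about a stop and a half of noise after down-sampling to 36 MP.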

Paul, absolutely! That’s the advantage of using down-sampling and it really does work.

Hey Nasim, just a quick clarification. Will the perspective of the two images (a single shot at 24 mm and several 70 mm images stitched to get the same FOV) be the same? Intuitively, perspective is solely a function of focal length for a given sensor size, so I guess not. Please correct me if I am wrong.

Vaibhav, we have had numerous discussions on this. Perspective does not change with focal length – only a change in camera to subject distance does.
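This can be checked with a simple pinhole-camera model, in which an object of height H at distance Z projects to an image height of f·H/Z. In this sketch (the object sizes and distances are made up for illustration), the size ratio between a near and a far object, which is what we perceive as perspective, stays the same when only the focal length changes, and shifts as soon as the camera moves.

```python
def image_height(focal_mm, object_height_m, distance_m):
    """Pinhole projection: image height on the sensor, in millimetres."""
    return focal_mm * object_height_m / distance_m

def near_far_ratio(focal_mm, near, far):
    """How much larger the near object appears than the far one in the frame."""
    return image_height(focal_mm, *near) / image_height(focal_mm, *far)

person = (1.8, 5.0)     # hypothetical: 1.8 m tall, 5 m from the camera
tree = (10.0, 50.0)     # hypothetical: 10 m tall, 50 m from the camera

print(near_far_ratio(24, person, tree), near_far_ratio(70, person, tree))

# Step back 5 m: both distances grow, and the ratio changes.
person_back = (1.8, 10.0)
tree_back = (10.0, 55.0)
print(near_far_ratio(24, person_back, tree_back))
```

The focal length cancels out of the ratio, so a stitched 70 mm mosaic and a single 24 mm frame taken from the same spot share the same perspective.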

I think good high-ISO performance cannot be counted as an advantage, because you need a tripod anyway, and if you have a tripod, you can simply use a lower ISO with a slower shutter speed.

If the camera were able to take 8 shots hand-held, then that would be something :) Let’s see if the K-3 II can do this.

Ertan, true, but I was primarily looking at that as another potential, particularly when the feature becomes available when shooting hand-held (I think it is just a matter of time). Not sure about the K-3 II, but it would be great if that was an option, hopefully without engaging the mirror and the shutter.

Hi Nasim, great article as usual, but maybe one correction is required. Ricoh beat Olympus to this type of technology by about 15 years.

www.macworld.com/artic…/rdc7.html

Of note:

“The RDC-7 has three “Pro Mode” options, all based on Ricoh’s Image Enhancement Technology. Pro-L mode shifts the CCD by one pixel to take two shots that a Ricoh-developed algorithm composes into one image. This boosts resolution and definition by 20 percent without increasing image size. The default Pro Mode uses a Ricoh-developed interpolation algorithm that eliminates “jaggies” when the output resolution increases to 7 megapixels.”

Sam, thank you for pointing it out – had no idea that Ricoh was the first to use this technology. I went ahead and removed a couple of words from the first sentence.

Now it makes me wonder why Ricoh has not implemented sensor shift on every camera. Makes a lot of sense to do it!

Funnily enough, I wasn’t aware of it either until I watched a review of the K-3 in which they mentioned the use of small sensor shifts (utilising the IBIS system) to ‘fake’ the effect of an anti-aliasing filter (which the K-3 lacks, of course). This got me thinking about using that pixel shift to create a higher-resolution image. Since Ricoh had recently (at the time) acquired Pentax, I did a bit of a search for this technology being used for that purpose, and sure enough, there it was, fifteen years ago, even before the megapixel wars began.

It looks like JVC was doing this at around the same time as Ricoh too: www.us.jvc.com/archi…gc_qx5hdu/ (I believe this camera came out in 2001).

I didn’t know that until looking it up, but I did know (and suspect that many readers are already aware too) that Hasselblad has also been doing this since 2011 with their “Multishot” medium-format cameras. The current version uses a 50-MP sensor to put out a 200-MP image. It can also shift the sensor to provide a 50-MP image with full-color information at every pixel.