Depth of field causes more confusion among photographers — beginners and otherwise — than nearly any other topic out there. Many “common knowledge” tips about depth of field have some flaws, or are at least partially inaccurate. At a personal level, it took me far too long to separate the good suggestions from the bad, and I eventually realized that I had been relying upon some erroneous information for years without knowing better. My goal with this article is not to make the most controversial possible statements, or needlessly poke holes in things that are almost entirely true. Instead, my hope is to cover some of the basic, common inaccuracies that you may have heard about depth of field, in case you’ve been relying on faulty information for your own photography.

1) Is it True that Depth of Field Extends 1/3 in Front of Your Subject, and 2/3 Behind?

No, this one isn’t true. The 1/3-front, 2/3-behind suggestion is a fairly common one, but it doesn’t play out in practice.

In fact, the front-to-back ratio for depth of field varies wildly depending upon a number of factors. In very specific cases, it’s true that the ratio can be around 1:2 — but, more frequently, it’s something else entirely.

Which factors matter here? There are three: focal length, aperture, and camera-to-subject distance. As you focus closer, use wider apertures, and use longer lenses, the ratio starts to approach 1:1. When you do the opposite, the ratio quickly passes through 1:2, then 1:3, 1:10, 1:100, and onwards to 1:∞. The range where the focus is 1/3 in front of your subject and 2/3 behind (or the range where it’s close to that ratio) is quite thin indeed.
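To see how quickly the ratio moves, here is a minimal Python sketch based on the standard thin-lens depth of field formulas. The function names, the 0.03mm circle of confusion, and the sample lens setups are assumptions chosen for illustration, not values taken from any particular calculator.

```python
import math

def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance from the thin-lens approximation, in millimeters."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def dof_limits_mm(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Return (near_limit, far_limit) of acceptable sharpness, in millimeters."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    if focus_mm >= h:
        # Focused at or beyond the hyperfocal distance: far limit is infinity
        return near, math.inf
    far = focus_mm * (h - focal_mm) / (h - focus_mm)
    return near, far

def behind_to_front_ratio(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Depth of field behind the focus point divided by depth of field in front."""
    near, far = dof_limits_mm(focal_mm, f_number, focus_mm, coc_mm)
    return (far - focus_mm) / (focus_mm - near)

# Assumed example setups: (focal length in mm, f-number, focus distance in mm)
setups = [(105, 2.8, 1000), (50, 8, 3500), (24, 11, 1500), (24, 11, 3000)]
for focal, n, dist in setups:
    ratio = behind_to_front_ratio(focal, n, dist)
    print(f"{focal}mm f/{n} at {dist / 1000:.1f} m -> 1:{ratio:.2f}")
```

In this model the ratio works out to (H + s - 2f) / (H - s), where H is the hyperfocal distance, s the focus distance, and f the focal length. It lands on 1:2 only when you focus at roughly one third of the hyperfocal distance, and it runs off to infinity as s approaches H.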

Where does this tip come from, then? My guess is that it started simply enough: There are cases where the depth of field behind your subject is twice as great as the depth of field in front of your subject. And, with certain lenses and apertures, that spot happens to be a very “medium” focusing distance away from your lens — in the range of 3 meters (10 feet). So, it’s not surprising to me that this morphed into a universal 1/3-front, 2/3-behind suggestion. And, it is indeed useful for beginners to know that depth of field takes longer to fall off behind your subject than in front.

Still, it’s quite a narrow window where the ratio is closer to 1:2 rather than 1:1.5, or 1:3, or 1:4, and so on. The ratio 1:2 isn’t some common figure that tends to occur when you focus at “medium” distances. It’s much more of a special case than a generalization.

At such a close focusing distance and wide aperture, as well as with a 105mm medium telephoto lens, the depth of field here falls off in front and behind my focus point at almost exactly the same rate.

2) How Do You Double Your Depth of Field?

It depends. But there is no simple thing you can do to universally double your depth of field for a given photo, so long as you’re defining “double” how most people do, and you’re not calculating polynomial equations in your head.

What about using an aperture that is two stops smaller? Or stepping twice as far away from your subject and refocusing? Or using half the focal length of your current lens?

Nope. None of those things universally double your depth of field, even though you might have heard that they do.

This is easy enough to realize simply by doing a quick thought experiment. Say that you’re using a wide-angle lens, and your depth of field ranges from 1 meter to 15 meters. In this situation, infinity will be almost within your depth of field, but not quite; distant objects are probably only the slightest bit blurry. Still, they aren’t technically sharp enough to count within your depth of field.

In that case, you don’t need to do very much in order to get the farthest objects completely within your depth of field. Simply change your aperture by a fraction of a stop, or use a slightly wider focal length, or step back just a bit and refocus in the same spot.

In all of these cases, a minor change to your settings (focus distance, aperture, or focal length) will increase your depth of field from 14 meters (15 minus 1) to an infinite number of meters. Clearly, that’s more than doubling your depth of field! And, crucially, you don’t need to change your camera settings much in order to accomplish it.

(If you’re wondering about the exact values I used, it’s true that they’re a bit arbitrary. However, to make sure that they were realistic, I used this calculator with a 14mm lens, a subject distance of 2 meters, an aperture of f/5.6, and a 0.015mm circle of confusion. Feel free to use it and play around with your own values.)

That’s why there’s no merit to claims that you can “double your depth of field” by doing one particular thing for any photo. Sometimes, focusing twice as far away will triple your depth of field. Other times, doing exactly the same thing will increase it 10x, 50x, or infinitely. It all depends upon how much depth of field you already have.
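The thought experiment can also be checked numerically. The sketch below uses the thin-lens formulas with the values quoted above (14mm, 2 meters, 0.015mm circle of confusion). The function name and the 105mm close-up setup are assumptions for illustration, and the output of any particular online calculator may differ slightly from this model.

```python
import math

def total_dof_m(focal_mm, f_number, focus_m, coc_mm):
    """Total depth of field in meters (thin-lens approximation).

    Returns infinity once the far limit reaches infinity.
    """
    s = focus_m * 1000.0
    h = focal_mm ** 2 / (f_number * coc_mm) + focal_mm  # hyperfocal distance, mm
    if s >= h:
        return math.inf
    near = s * (h - focal_mm) / (h + s - 2 * focal_mm)
    far = s * (h - focal_mm) / (h - s)
    return (far - near) / 1000.0

# Stop down in third-stop steps from f/5.6, keeping everything else fixed
for n in (5.6, 6.3, 7.1, 8.0):
    print(f"14mm at 2 m, f/{n}: {total_dof_m(14, n, 2.0, 0.015):.1f} m")

# An assumed close-up setup, where two stops does come close to doubling
wide = total_dof_m(105, 2.8, 1.0, 0.03)
narrow = total_dof_m(105, 5.6, 1.0, 0.03)
print(f"105mm at 1 m, f/2.8 -> f/5.6: depth of field x{narrow / wide:.2f}")
```

With these inputs, a third of a stop already multiplies the depth of field several times over, and two thirds of a stop pushes the far limit to infinity. The same formulas give almost exactly 2x for two full stops in the close-up case, which is probably why the "double" rules sound plausible in the first place.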

3) How Many Variables Affect Depth of Field in a Photo?

Assuming a typical lens, there are three:

- Focal length

- Aperture

- Camera to subject distance (how far away you’re focused)

From time to time, you may hear online that only two variables affect depth of field in a photo: aperture and magnification.

There’s a similar (though slightly less common) argument, too, that two other variables are the only ones that affect depth of field: subject distance and entrance pupil size.

Neither of these claims is technically wrong, but there’s an issue: People who say that depth of field only contains two variables are merging two of the three together. That’s perfectly fine, but the individual components still matter, and they still affect your depth of field.

Magnification merges together focal length and subject distance. (It’s the size of an object’s projection on your camera sensor relative to its size in the real world.)

Entrance pupil size merges together focal length and aperture (focal length divided by f-number).

Most of the time, it doesn’t make things simpler to combine these variables together. No one in the field spends time calculating entrance pupils. The same is true for magnification, unless you’re doing macro photography.

To put it simply, all three components matter — focal length, aperture, and focusing distance. If you change one without compensating by also changing another, you’ll alter your depth of field every time.
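A short Python sketch shows what the merged variables look like in practice, again under thin-lens assumptions. The function names and the sample values (f/8, a 0.03mm circle of confusion, 50mm and 100mm lenses) are illustrative choices.

```python
def magnification(focal_mm, focus_mm):
    """Thin-lens magnification: image size divided by subject size."""
    return focal_mm / (focus_mm - focal_mm)

def entrance_pupil_mm(focal_mm, f_number):
    """Entrance pupil diameter: focal length divided by f-number."""
    return focal_mm / f_number

def total_dof_mm(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Total depth of field in mm; assumes focus is closer than the hyperfocal distance."""
    h = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    far = focus_mm * (h - focal_mm) / (h - focus_mm)
    return far - near

# Same aperture and same magnification, reached with different lenses and distances
print(magnification(50, 550), magnification(100, 1100))    # both 0.1
print(total_dof_mm(50, 8, 550), total_dof_mm(100, 8, 1100))  # nearly identical

# Change only the focal length, and the depth of field changes a lot
print(total_dof_mm(100, 8, 550))

# The other merged variable: entrance pupil diameter
print(entrance_pupil_mm(50, 8))  # 6.25
```

At these close distances, aperture plus magnification does predict the result well, which is why the two-variable description is not wrong. It simply hides the fact that focal length and distance both had to change to keep the magnification constant.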

4) Do Crop Sensors Have Greater Depth of Field?

This one has a lot of controversy around it, and I don’t want to add to that. The reality is actually quite straightforward.

The short answer is no, crop sensors don’t inherently have more depth of field than large sensors, although it can seem that way — in order to mimic a larger sensor, you’ll have to use wider lenses, which do increase your depth of field. (You also could stand farther back, which again increases your depth of field, although that does alter the perspective of a photo.) But the sensor itself does not directly give you more depth of field.

When it comes down to it, this shouldn’t be too surprising. A crop sensor is like cropping a photo from a larger sensor (ignoring individual sensor efficiency differences and so on). Unless you think that cropping a photo in post-production gives you more depth of field, this shouldn’t cause any confusion. Indeed, if you crop a photo and display both images at the same print size, it’s even arguable that you will see a shallower depth of field in the cropped image, since any out-of-focus regions would be magnified. But now I’ve started diving into a different rabbit hole, and this is a complex discussion for another day.

Still, the claim that small sensors have more depth of field isn’t entirely unfounded. Imagine that you have two cameras — one with a large sensor, and one with a small sensor — as well as a 24mm lens on both. Because the crop sensor will have tighter framing, you might choose to step back or zoom out in order to match what you’d capture with the larger sensor. Both of these options — stepping back or zooming out — do give you more depth of field.

So, the result of using a smaller sensor might indeed be that your photos have more depth of field, if you don’t do anything else to compensate for it. But this is an indirect relationship. The smaller sensor itself is not what causes the greater depth of field; it’s the wider lens or greater camera-to-subject distance.
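As a rough numerical illustration of that indirect relationship, the sketch below holds the circle of confusion fixed, which is the assumption behind the argument above (many calculators instead scale it with sensor size, which is the separate rabbit hole mentioned earlier). The 1.5x crop factor, f/2.8, 2 meter distance, and function name are assumed values for illustration.

```python
import math

def total_dof_m(focal_mm, f_number, focus_m, coc_mm=0.03):
    """Total depth of field in meters (thin-lens approximation)."""
    s = focus_m * 1000.0
    h = focal_mm ** 2 / (f_number * coc_mm) + focal_mm  # hyperfocal distance, mm
    if s >= h:
        return math.inf
    near = s * (h - focal_mm) / (h + s - 2 * focal_mm)
    far = s * (h - focal_mm) / (h - s)
    return (far - near) / 1000.0

CROP = 1.5  # assumed crop factor

# Sensor size is not an input: a 24mm lens at 2 m gives the same number on either camera
same_lens = total_dof_m(24, 2.8, 2.0)

# Two ways to match the larger sensor's framing with the crop camera
zoomed_out = total_dof_m(24 / CROP, 2.8, 2.0)     # switch to a 16mm lens
stepped_back = total_dof_m(24, 2.8, 2.0 * CROP)   # stay at 24mm, move back to 3 m

print(f"24mm at 2 m:  {same_lens:.2f} m")
print(f"16mm at 2 m:  {zoomed_out:.2f} m")
print(f"24mm at 3 m:  {stepped_back:.2f} m")
```

In this model the extra depth of field comes entirely from the change in focal length or distance; nothing in the function knows which sensor is behind the lens.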

5) Does the Sharpest Focusing Distance Depend upon Output Size?

No, although it’s a nuanced argument.

Here’s the starting point: If you’re making tiny, scrapbook-sized prints, you have way more leeway in terms of what looks sharp compared to something like a large, 24×36 inch print viewed up close. You won’t notice errors very easily in the small print. Even when the original photo has some major flaws, they won’t be visible if the print is small enough (or far enough away).

But does that mean the sharpest possible focusing distance changes as your print size does? No, not at all.

Indeed, there is only one focusing distance that will provide you with the most detailed possible photo of your subject (or the most overall detail from front to back, if that’s your goal instead). Just because you can get away with focusing on your subject’s nose rather than their eyes, for example, in a small print, doesn’t mean that the “best possible focusing point” is on their nose. Whether you’re printing 4×6 or 24×36, and whether or not you can even see a difference, it’s still technically ideal to focus on their eyes.

Small prints let you mess things up more without noticing a huge effect; that’s very true. But they don’t alter the position of the best focusing point. So, the sharpest focusing distance does not depend upon output size (which is the impression you might get if you follow hyperfocal distance or astrophotography calculators too literally).

6) Do Hyperfocal Distance Charts Take Diffraction into Account?

No.

There are several flaws with hyperfocal distance charts. They don’t consider whether your foreground is nearby or far away (which matters if you want to focus at the proper distance). And, on top of that, they don’t take diffraction into account. They live in a world where f/8 is just as sharp as f/32.
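To put a rough number on that, the sketch below compares the diameter of the diffraction blur (the Airy disk, approximately 2.44 times the wavelength times the f-number) with the circle of confusion that a chart is built around. The 550nm wavelength, the 0.03mm circle of confusion, and the function name are assumptions for illustration.

```python
def airy_disk_diameter_mm(f_number, wavelength_nm=550.0):
    """Approximate diameter of the Airy disk (out to its first dark ring), in mm."""
    wavelength_mm = wavelength_nm * 1e-6
    return 2.44 * wavelength_mm * f_number

COC_MM = 0.03  # assumed circle of confusion behind a typical chart

for n in (8, 16, 32):
    blur = airy_disk_diameter_mm(n)
    print(f"f/{n}: diffraction blur ~{blur:.4f} mm vs. circle of confusion {COC_MM} mm")
```

With these inputs, the diffraction blur at f/32 (about 0.043mm) is already larger than the 0.03mm blur that the chart treats as acceptably sharp, while at f/8 it is only about a third of it. A chart built purely on defocus has no way of reflecting that difference.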

If you’re still using hyperfocal distance charts to focus in landscape photography, you’re missing out on some potential sharpness in your images. It won’t be the difference between a masterpiece and a pile of garbage, but it’s enough that you might save yourself the price of a “sharper” lens by learning the proper technique for your current gear!

7) Should You Focus 1/3 of the Way into the Scene?

I’m not sure where this myth originated, but it holds no water.

The theory here is that you can get a sharp landscape photo from front to back by focusing 1/3 of the way into a scene — at which point, your foreground and background appear relatively equal in sharpness.

There are two problems here. First, it’s vague. If the farthest element in your photo is a mountain 30 kilometers away, is the “1/3” mark at 10 kilometers away? That would be quite a ridiculous place to focus, since, for all practical purposes, it’s infinity. If you focus at infinity for a landscape photo, you’ll sacrifice foreground detail unnecessarily.

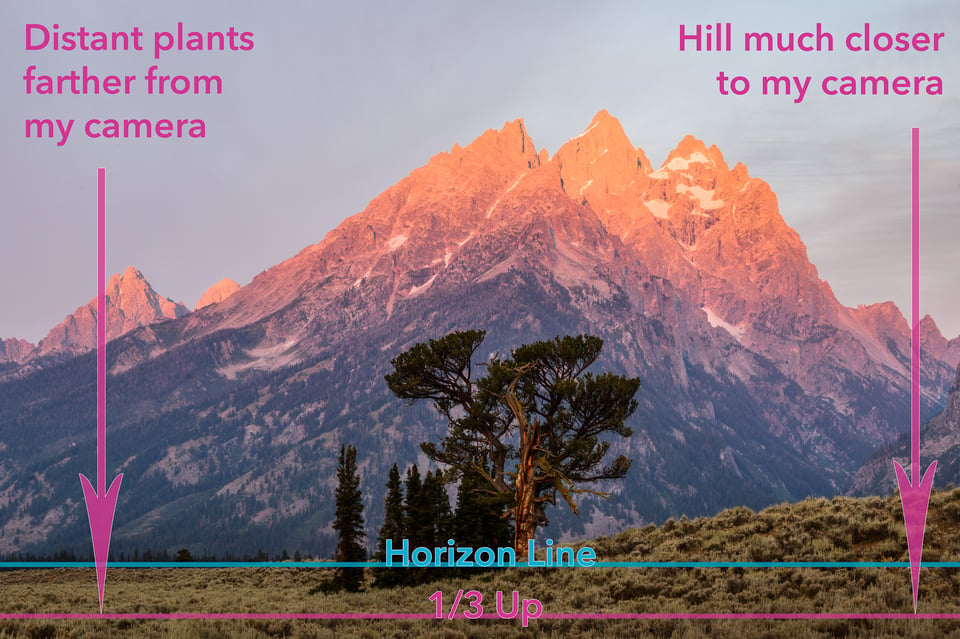

I’ve heard other photographers say that it means 1/3 up the scene, visually speaking — in other words, taking the horizon as the top, and the bottom of your photo as the bottom. But that gets into another issue: The 1/3-up line almost always intersects with elements that are different distances from your camera. So, it still doesn’t tell you anything useful.

In the photo below, for example, would you focus on the nearby hill at the right, or the distant shrubs on the left? They’re both 1/3 up, by this definition:

In short, the 1/3 focus method is confusing to implement, and it’s not accurate. There are better ways to focus in a landscape photo if you want everything to be as sharp as possible.

8) Do Photos Look More Natural When Their Background is Slightly Out of Focus?

It’s an interesting question.

I hear statements like this relatively often: “Personally, for landscape photography, I make sure that my background is slightly softer than the foreground, since it looks more like how our eyes see the world.”

My question: Does it?

If you, personally, like your backgrounds to be slightly softer for aesthetic reasons, go for it. There’s nothing wrong with that decision at all. If you do so, though, keep in mind that it’s a personal creative decision, and not something that necessarily “looks natural” to everyone.

That’s simply because, in the real world, we absolutely have the ability to look in the distance and see a lot of sharpness and detail. Right now, I’m looking out a window at trees more than a mile away, and I can make out individual branches quite well (indeed, better than my camera setup, if I’m using a wide enough lens).

So, no, it’s not inherently natural for the foreground of an image to be sharper than the background. Out-of-focus blur isn’t a particularly strong depth cue to our eyes that something is in the distance.

To demonstrate that point more clearly, I made a quick diagram in Photoshop. This is one of those optical illusions where you can see the figure “popping out” in two different directions. Which direction do you notice first, or most prevalently over time? Do you see the top square at the front, or at the rear?

Personally, when I first look at this diagram, I can’t help but see the top square appearing farther away. Over time, I can flip it in either direction, but it does tend to keep jumping back to the distance. This is despite the fact that it’s the only “in focus” square of the three. Everyone is different, so your mileage may vary; however, in an unscientific survey before publishing this article, I can confirm that six for six saw it this way as well!

If sharpness is such an important cue for telling our brain that an object is nearby, the top square should appear closest for most people, not farthest away. So, what gives?

The answer is that our eyes pick up several depth cues from the real world, and defocus blur in a photograph isn’t one of the big ones. Other depth cues like the height and size of the object in the frame are stronger. Those are the driving forces in the illusion above — not sharpness or blurriness.

Still, I’ll make a couple of counterpoints as well.

People, in general, spend a lot of time watching television and movies. So, perhaps our perception depends upon those frequent cues. And in most shows, it’s very common for the background to be slightly blurred (or more) in most scenes, since the focus tends to be on people talking nearer to the camera. There’s no way to rule out the possibility that the same effect could transfer to photos, and create its own depth cue — albeit, not necessarily as strong as others that may exist outside of digital media.

It’s also true that if we look at extremely nearby objects with our own eyes, the background will be clearly out of focus. The same is true if we look in the distance, and there’s something quite close to our eye. So, I could understand an argument that some amount of blur in a photo — foreground or background — can look more natural than perfectly sharp photos with the greatest possible depth of field. Even then, though, our brains always attempt to create a sharp mental map in every direction. Day to day, most people won’t pay attention to out-of-focus blur caused by their own eyes.

To sum it up, this “myth” isn’t as strong or widespread as others out there, but it’s still something you’ll come across. Personally, it is my opinion that landscape photos (or architectural images, and similar) should look sharp from front to back unless you have a separate creative reason not to do so. Other people may have different opinions, and I’m open to changing mine if I see a counterexample where slight blur in the background leads to a more natural look. As a whole, this topic is more about creativity than the technical side of things, which certainly allows for more individual interpretation.

9) What Do You Think of the Merklinger Method of Focusing and Selecting an Aperture?

Especially following my article on the inaccuracies of hyperfocal distance charts, I’ve gotten this question a few times.

I hadn’t heard about the Merklinger method until about a year ago. However, I will emphasize that it has major flaws if you’re using it as a way to capture the sharpest possible photos.

The Merklinger method involves focusing at the farthest object in every photo. If you’ve ever done landscape photography, it should be clear that this technique will make you lose some sharpness, especially if you have nearby foreground elements. By focusing on your farthest subject, you’re throwing away a lot of good depth of field.

Merklinger’s method succeeds at what it aims to do — providing a way to estimate depth of field in an image — but it certainly doesn’t provide a method of capturing maximum sharpness from front to back. Next time you’re out in the field, you can test this by photographing a scene with a nearby foreground. When you focus at infinity, no matter what aperture you use, you’ll get more blur than you would by focusing between the foreground and background.

For this photo, I focused roughly on the corn lilies in the foreground, since they’re double the distance to my nearest element (the grass at the very bottom). If I had focused at infinity, even using f/16, the closest plants in my foreground would have lost significant sharpness. As it is, though, both my foreground and background are quite sharp.

10) Conclusion

Hopefully, this helped shine a light on the depth of field myths that you’ll see so frequently today. This is an important enough subject that accurate information is valuable, even if it isn’t always easy to find. And, of course, some of the tips in this article are suggestions more than pure, mathematical debunking. If you want to have defocused backgrounds, for example, go for it! Photography is all about your own creative vision, and that’s not something for me to determine.

Depth of field is a huge topic, and there certainly may be myths I haven’t covered yet. For space purposes, I also didn’t go into all the little nuances of some of these individual points, since this article already is quite long. So, if you have any questions about depth of field, feel free to let me know in the comments section below. I’ll do my best to answer them, or clarify anything I’ve written above.

This DOF equivalence between camera systems (FF, APS-C, MFT) seems to be confusing, but actually it is not. I always have the impression that it is explained in a way that is too complicated for most people. Taking a photo with 50mm and f1.4 on FF basically means that I have to divide the focal length by the crop factor and(!) also have to divide the aperture by the crop factor to get the same results on the small sensor (in terms of viewing angle and DOF). I don’t take ISO and shutter speed into account, as this simply adds some confusion for most people and wouldn’t change the visual result of the picture in most cases (when having enough light). At the end the camera’s metering system will do the job anyway …

For my example we have:

FF -> APS-C -> MFT

50mm -> 35mm -> 25mm

f1.4 -> f0.93 -> f0.7

What it actually means: you need wider and brighter lenses on a ‘cropped’ sensor system. It’s as simple as this … and easy to understand!

Great Article Spencer.

About your optical illusion: I think for most people it would appear that they see the blurred square ahead because I think most of our (western) brains are wired to read from left to right. It’s where I naturally look first, and that is probably what makes my brain perceive the image back to front (left to right). I suppose you could test this by placing the sharp square bottom left and the blurred out one top right, essentially swapping them. It might just confirm which illusion “pops” first for most people.

Cheers Phil

RE: the 3 squares. The in-focus square is ‘seen’ as the rear one… because most objects are looked down upon…not floating in the air above our heads.

Rich, agreed — that’s what I meant in the article when I said that an object’s height in the frame is a stronger visual cue than the amount of blur.

Thank you. Great info.

I had initially thought that maybe the blurry square looked closer because it appeared bigger. But after reading the comment above, I flipped it over. I found that I could make it flip back and forth a lot easier upside down. If you made the observable size the same (despite the blur) I bet your point would be made even stronger.

Thank you, Roger! Yes, that definitely makes sense. I think the size and height cues are the strongest. Initially, I tried making the squares the same size, but the blurred one started appearing much fainter. That added an issue of its own, so I just reverted to the larger version, but perhaps there is a happy medium somewhere in between.

Hi again Spencer.

Great idea to tackle photographic myths – with counter arguments (yours) having the gravitas that we readers can trust.

Suggestion for your next article: the (so called) “triangle of exposure”.

Regards, John M

Thank you, John, happy to hear it, and glad that you enjoyed it!

The “exposure triangle” is a misnomer (indeed, the creator called it the “photographic triangle,” which is more accurate), simply because ISO isn’t really a component of exposure. Exposure has to do with the amount of light that you collect, and the only three things that change the amount of light hitting your sensor are your aperture, shutter speed, and scene/flash brightness. Other variables, such as ISO, signal-to-noise ratio, and brightness sliders in post-production, aren’t “exposure” by its true definition. Still, they do have a major effect on your final photo, and are all important parts of photography as a whole.

Thx, Spencer – Yes, that’s exactly the myth/misconception I was implying.

I’m suggesting an article that expands on this – ‘cos the myth that changing ISO somehow modifies the “sensitivity” of the camera’s sensor is omnipresent! A search on meaning of ISO will return this (incorrect) definition in about 90% of cases … even from sites one would expect more from.

Regards, J-TKA

That is true, the misinformation is fairly common! In fact, we are currently working to make our own ISO article more accurate in that regard, yet still understandable for beginners. Hopefully you’ll see that update (and some much bigger ones) within the next few months!

Point 8) – the image plays on perspective – very easy for the mind to draw some 45 degree lines and make a sort of cube from the three squares. As a test, flip the image 180 degrees so that the sharp square is bottom left and the fuzziest square is toward top right. Totally different “perspective” in my eyes, and the fuzzy looks just fine at the far back of the “cube”

Yes, my main point there is that people will see whichever square is highest as appearing in the rear. That’s perhaps the strongest visual cue to our brain about how a nonmoving, 2D image is structured. So, if out-of-focus blur has any effect as a depth cue, it’s a small one. It doesn’t surprise me that placing the out-of-focus square at the top also results in the same appearance that it is in the rear. But I think it has more to do with height in the image than being out-of-focus.

Spencer, very informative article. Thank you.

Thank you, Paul, glad that you enjoyed it!

This article relates to a thought I had just this week.

I read the item about the “best black friday deals” and on top of the list was the Nikon D750 + 24-120 f4 combo, which also referred to the review of the 24-120 lens.

I own an APS-C camera with an f2.8 lens.

I understand the numbers are not exactly equivalent, but in terms of depth of field, does using an f4 lens on a FF camera instead of an f2.8 lens on a cropped sensor still make a noticeable difference?

I recommend that you read comment 18.1 that I just left for Pieter if you want a more detailed explanation. However, the short answer is that there won’t be a major difference. Sure, the numbers aren’t totally equivalent (the real numbers would be closer to f/4.3 being equivalent to f/2.8), but that’s quite a minor difference. Unless you compare photos on top of each other, and you’re really looking closely, I wouldn’t consider it relevant as far as depth of field.

And, indeed, if you’re forced to shoot at a higher-than-base ISO (anything above 200 with an aps-c sensor, for the most part) due to light concerns, there is no theoretical difference in image quality between your camera with the f/2.8 lens and a full-frame camera with an f/4.3 lens. The reason is simply that the lost light from f/4.3 on the full-frame camera requires a higher ISO, and the full-frame camera at a higher ISO performs similarly to an aps-c camera at a lower ISO. That’s getting into the nitty-gritty of equivalence though, where many words are necessary to convey small amounts of information, and any slip-up can prove fatal. My comment 18.1 touches on it a bit, but this also is a discussion that requires a more complete explanation to convey its nuances properly :)

Spencer, thank you for this clear writing…

I still have a question I always ask myself, one where other factors like diffraction and the sensor play an important role.

Which system (lens, sensor, focal length, aperture) would give the largest DOF at, say, 10cm?

So, a macro photo…

Would that be an iPhone type of camera, a FF, or does it not matter at all?

regards,

Pieter

Pieter, that is a very clever question. At least in theory, the answer is that the sensors would all be exactly the same, since you can (theoretically) perfectly replicate a photo from one sensor to another via equivalence calculations.

Equivalence says, essentially, that an exposure of 1/100 second, f/8, ISO 400, and 100mm on a full-frame sensor will be totally identical to an exposure of 1/100 second, f/4, ISO 100, and 50mm on a micro four-thirds sensor (due to the 2x crop factor). There are many individual sensor concerns that may make this ratio inaccurate — for example, one camera performing better at high ISOs, or having more pixels, or just a more efficient sensor design in general (usually favoring larger sensors by a bit) — but that’s the “theoretical” math behind everything. In a perfect world, if you could equalize the megapixels between the sensors without any consequences, the two photos would look 100% identical.

If you’re shooting at higher ISO values and you need a lot of depth of field, camera sensor size theoretically does not matter. You’ll get identical photos between them all.

However, if you’re shooting at 1/100 second, f/8, ISO 100, and 100mm on a full-frame sensor, that’s not possible to match with a 2x crop-sensor body, since you’d need to use an ISO of 25 (not available on any M43 cameras I know of).

Lenses are also different in different systems. There are 105mm f/1.4 lenses available for full-frame cameras, but no 52.5mm f/0.7 lenses currently made for M43 cameras (again, that I know of). Not that this couldn’t happen (or that you couldn’t use a speed booster like Metabones makes, at least in theory) — it just doesn’t exist yet.
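The arithmetic in this reply can be written as a few lines of Python. This is only a sketch of the theoretical conversion described above (divide focal length and f-number by the crop factor, and divide ISO by the crop factor squared). The function name is made up for illustration, and it ignores the real-world sensor differences already mentioned.

```python
def equivalent_settings(focal_mm, f_number, iso, crop_factor):
    """Convert full-frame settings to the theoretical equivalent on a smaller sensor.

    Shutter speed stays the same, so it is not an input.
    Returns (focal length in mm, f-number, ISO).
    """
    return (
        focal_mm / crop_factor,
        f_number / crop_factor,
        iso / crop_factor ** 2,
    )

print(equivalent_settings(100, 8, 400, 2))    # (50.0, 4.0, 100.0)
print(equivalent_settings(100, 8, 100, 2))    # ISO comes out as 25.0
print(equivalent_settings(105, 1.4, 100, 2))  # 52.5mm at f/0.7
```

The second and third calls are the two cases where the math is fine but the gear runs out: an ISO of 25 and a 52.5mm f/0.7 lens.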

Full-frame sensors tend to give you more flexibility in shallow depth of field for this reason, and they’ll give you better image quality than is possible with a small sensor if you shoot at low ISOs. But if you’re at higher ISO values, and you need as much depth of field as possible, there’s no theoretical difference between — for example — a 24mpx aps-c sensor and a 24mpx full-frame sensor, given the same sensor efficiency. If you want maximum depth of field, it really doesn’t matter what system you choose, assuming that you always shoot significantly higher than base ISO.

As for your question — the best lens, sensor, focal length, and aperture to use for maximum depth of field in macro — it’s trickier to answer than you might think. Although the specific lens and sensor don’t matter, and aperture has an obvious relationship to depth of field, the focal length you pick (as well as the distance you stand from your subject) might have an effect on depth of field in macro. Maybe. It depends upon your interpretation of depth of field.

One answer is that it doesn’t matter, and every focal length will give you an equal depth of field in practice; magnification is what matters. A 200mm lens at 1:1 magnification will have the same depth of field as a 15mm macro lens at 1:1 magnification. In other words, there is no more detail in the background with one lens or the other. A barely-readable out-of-focus line of text in one photo will be just as readable in the other.

The other answer is that the wider lens will have a greater depth of field, even at the same magnification. The reason? Even though just-readable text wouldn’t change between the 15mm and the 200mm lens, the text would be smaller in the background on the 15mm lens. It wouldn’t be blown up into huge bokeh-balls. That means, with the 15mm lens, the background will appear easier to understand and interpret than with the 200mm lens, even though it doesn’t have any actual additional detail. This is easiest to understand simply by Googling macro photos taken at ultra-wide angles. According to one interpretation of depth of field, they don’t have any more than a 200mm lens would. Other people would argue that they do, even at the same magnification and aperture, since it visually appears that they do. As far as I understand it, the answer here isn’t settled. It’s not even a debate that many people are having, just because it isn’t an especially well-known topic. Personally, I fall on the side that because a 15mm macro lens appears to have a greater depth of field (even if it technically doesn’t), you can think of it as having more depth of field. But you won’t technically get clearer background details from using it.
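One way to see why both answers can be defended is to compare, under a thin-lens model, the size of the blur disk from a background point with the size at which a background detail is drawn on the sensor. The sketch below is a simplification (real macro lenses are not thin lenses), and the function name, the f/8 aperture, the 500mm gap between subject and background, and the 5mm detail size are all assumed for illustration.

```python
def background_blur_and_detail(focal_mm, f_number, magnification, behind_mm, detail_mm):
    """Return (blur disk diameter, projected detail size) on the sensor, in mm.

    behind_mm is how far the background sits behind the focused subject;
    detail_mm is the real-world size of a background detail (e.g. a letter).
    """
    subject_mm = focal_mm * (1 + 1 / magnification)  # lens-to-subject distance
    image_mm = focal_mm * (1 + magnification)        # lens-to-sensor distance
    background_mm = subject_mm + behind_mm           # lens-to-background distance
    aperture_mm = focal_mm / f_number                # aperture diameter
    blur = aperture_mm * magnification * behind_mm / background_mm
    detail = image_mm * detail_mm / background_mm
    return blur, detail

for focal in (15, 200):
    blur, detail = background_blur_and_detail(focal, 8, 1.0, 500, 5)
    print(f"{focal}mm: blur {blur:.2f} mm, detail {detail:.3f} mm, "
          f"blur/detail {blur / detail:.2f}")
```

With these inputs the blur-to-detail ratio is the same for both lenses, which matches the "equally readable" interpretation. The blur disk itself is several times larger with the 200mm lens, which matches the impression that the wide lens keeps the background easier to interpret.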

Hopefully, that all makes sense!

Another way of looking at the influence of focal length on DOF (last part of the reply above) is that while the magnification of the subject is the same for the 15 and 200mm lenses, the magnification of faraway details in the background is very different. And because of this, using a long macro lens (the 200mm in this case) you can select a suitable non-distracting small part of the background by slightly moving the camera and use this to isolate the subject, while with the 15mm you would nearly always get a “busy” background that includes everything within a large viewing angle.

In practice one often has to trade DOF (many macro subjects like insects are bigger than the DOF zone at common aperture values) against background isolation, so there is no easy solution. Often the photographer probably wants to concentrate on the macro subject and show as little background detail as possible, which for e.g. butterflies, dragonflies etc. means using a longer lens like 200-400mm on a DSLR. But if you want “environmental” shots (with the same magnification of your macro subject) you may want to show as much of the background scene as possible, which means using a WA lens. And there we run into another practical issue: most WA lenses for DSLR (especially WA zooms) have relatively poor image quality for strong closeups. My experience is that small-sensor compacts can give better results for closeups in the WA range, not because of DOF but because the optics have fewer issues at high magnification (of course assuming that the light levels are sufficient to allow using low ISO on the small sensor camera).

So, there is no final answer, it really depends on what the photographer wants to accomplish with the image.

Yes, exactly! The details in the background vary wildly with a wide-angle versus a telephoto lens. Although the “typical” look is one with a telephoto, that doesn’t mean it’s inherently better. Wide-angles just provide a different appearance, which may or may not suit what the photographer is after.