Sharpening remains a particularly confusing topic among photographers, especially given the tremendous number of post-processing options available. Some post-processing software has so many options that it is hard to know where to start; others do not let you use optimal methods in the first place. If you are trying to use the best sharpening settings – including the lowest possible levels of noise and other artifacts – the ideal method is three-step sharpening.

Note: No matter the scene you photograph, you shouldn’t do any sharpening until you have finished all your other edits! In other words, sharpening should be your final adjustment before making a print. (With Lightroom, and some other non-destructive post-processing software, this does not apply. Unlike in many programs, the order of your Lightroom adjustments has no effect whatsoever on the final image. As an example, cropping and then adding a vignette in Lightroom produces the same effect as adding a vignette and then cropping.)

Also, before I cover three-step sharpening, remember that it is impossible to restore detail that your equipment did not capture in the first place. Sharpening is nothing more than increasing the local contrast of details you already captured. Still, it is an important part of post-production, particularly if you are making prints.
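To make “increasing the local contrast” concrete, here is a minimal Python sketch of an unsharp mask applied to a single row of pixel values. It is only an illustration under simple assumptions: the blur is a plain moving average, and the names `box_blur` and `unsharp_mask` are hypothetical helpers, not the algorithm that Lightroom or Photoshop actually uses.

```python
def box_blur(row, radius=1):
    """Blur a row of pixel values with a moving average (edges are clamped)."""
    n = len(row)
    blurred = []
    for i in range(n):
        window = [row[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def unsharp_mask(row, amount=1.0, radius=1):
    """sharpened = original + amount * (original - blurred)."""
    blurred = box_blur(row, radius)
    return [value + amount * (value - soft) for value, soft in zip(row, blurred)]


# A soft-looking edge between a dark area (10) and a bright area (50).
edge = [10, 10, 10, 10, 50, 50, 50, 50]
sharp = unsharp_mask(edge, amount=1.0, radius=1)
print(sharp)
```

The flat regions on either side stay at 10 and 50, while the two pixels next to the edge are pushed apart (one darker than 10, one brighter than 50). No new detail appears; the existing edge is simply exaggerated.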


1) Step One: Deconvolution Sharpening

The first, fundamental type of sharpening is called deconvolution or capture sharpening. This, essentially, is how you undo the inherent softness of a photograph – softness caused by factors that are impossible to counteract in the field, such as the low-pass (anti-aliasing) filter in many cameras. Even if your camera, like my D800E, doesn’t have a low-pass filter, deconvolution sharpening is still necessary.

Essentially, you can think of deconvolution sharpening as a faint grain applied across the entire image. This adjustment is not strong at all; it simply lays the groundwork for more extensive sharpening later.

To use deconvolution sharpening in practice, the most important point is to maintain restraint. This type of sharpening applies equally across every part of a photograph, including areas without any detail. If your settings are too aggressive, you may end up adding noise, grain, and other artifacts to low-detail areas of your photo.

In Practice

So, which deconvolution settings are ideal? That varies from photo to photo, as well as with the specific software you use. With Lightroom, deconvolution sharpening is very easy to apply. Set the radius as small as possible (0.5 pixels), the detail as high as possible (100) and the masking as low as possible (0). Then, adjust the sharpening amount so that the grain in low-detail areas is tolerable. For my D800E, this is typically between 20 and 40, depending upon the image. Photos that are filled completely with details (as in, no clouds or out-of-focus areas) will let you increase the sharpening value more than images with low-detail regions.

If you use Photoshop, I recommend doing the same adjustments in Camera Raw. However, if you prefer working within the main Photoshop workspace, you can do similar adjustments via the smart sharpen tool (among many, many others).

Pay attention to the areas without detail more than the areas with detail for deconvolution sharpening. Don’t be hesitant to increase the “sharpening” slider, but be careful not to add any unwanted grain or noise.

Also, if you are working in a program like Lightroom or Camera Raw, it is fine to do some noise reduction in this stage. For most programs, though, it is best to do noise reduction before you do any sharpening at all. (As mentioned in the introduction to this article, the order that you apply Lightroom adjustments doesn’t matter.)

Lastly, I recommend sharpening at 100% magnification, which maps a single pixel of your image to a single pixel of your display. If you must zoom in further to see your changes clearly, 200% and 400% are also acceptable; however, don’t zoom in to any unusual value, whatever software you use (for example, a value of 90% or 234% is far from ideal). This also holds true for the next step, local sharpening.
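One way to see why 100%, 200% and 400% are safer than 90% or 234% is to count how many screen pixels each image pixel ends up covering. The sketch below assumes simple nearest-neighbor scaling along one row; real viewers use smoother interpolation, and `coverage_counts` is a hypothetical helper, but the uneven mapping at odd zoom levels is the same underlying issue.

```python
def coverage_counts(zoom_percent, image_width=100):
    """Return the distinct numbers of screen pixels that one image pixel covers.

    Uses nearest-neighbor mapping along a single row, with integer math only.
    """
    screen_width = image_width * zoom_percent // 100
    counts = [0] * image_width
    for x in range(screen_width):
        source = x * 100 // zoom_percent  # image pixel shown at screen pixel x
        counts[source] += 1
    return sorted(set(counts))


for zoom in (100, 200, 400, 90, 234):
    print(zoom, coverage_counts(zoom))
```

At 100%, 200% and 400%, every image pixel covers exactly 1, 2 or 4 screen pixels. At 90%, some image pixels are dropped entirely, and at 234% some cover two screen pixels while others cover three, so what you see on screen is no longer an even representation of the file.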

Your deconvolution settings should not add appreciable grain or noise to the low-detail areas of these waves.

2) Step Two: Local Sharpening

The most important sharpening step is local or creative sharpening. Here, you are sharpening only areas that already have high levels of detail. The point of local sharpening is to increase the clarity of important areas, yet avoid adding unwanted noise and grain to low-detail regions.

Say, for example, that you are photographing a portrait. After you apply a light deconvolution sharpening across the entire image, you are ready to target more specific details. You might want to sharpen a model’s eyes, for example, without also sharpening the background. That’s local sharpening.

In Practice

There are countless ways to put local sharpening into practice. I could write a full article on each of Photoshop’s sharpening tools, including ones that aren’t obvious at first, and it still wouldn’t be comprehensive. For now, I’ll only list a couple for simplicity’s sake.

First, if necessary, you could just stay in Lightroom for all your local adjustments. Although there aren’t nearly as many options – in fact, the local sharpening tool only has a single slider – there are other benefits to doing all your edits in Lightroom. For one, Lightroom is non-destructive, meaning that you can re-adjust all your old edits at any time. Also, RAW files in Lightroom are smaller than TIFFs exported from Photoshop.

If you do choose to work in Photoshop, be sure to use layers and masking. I will write a tutorial on masking soon, but the basic premise is that masks let you apply an adjustment – like sharpening – to specific parts of a photo rather than the entire image.

When I use Photoshop, one of my preferred tools is Smart Sharpen. To do local sharpening in Photoshop, my workflow may look like this:

- Open the photo in Photoshop.

- Duplicate the layer.

- Apply Smart Sharpen to the top layer at the strongest setting that I might need.

- Add a white mask to the top layer.

- Decrease the amount of sharpening in low-detail regions by painting gray or black onto the mask.

- Flatten the layers and save the image.
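Conceptually, the layer-and-mask steps above boil down to a per-pixel blend between the original layer and the sharpened layer. The following Python sketch shows that blend on one row of pixels. The function name and the sample values are made up for illustration, and Photoshop’s own blending may differ in its details.

```python
def blend_with_mask(original, sharpened, mask):
    """Blend two layers per pixel.

    A mask value of 1.0 (white) shows the sharpened layer, 0.0 (black) shows
    the original, and values in between (gray) mix the two.
    """
    return [m * s + (1 - m) * o for o, s, m in zip(original, sharpened, mask)]


# First three pixels: low-detail background. Last three: the subject.
original = [100, 102, 98, 40, 200, 45]
sharpened = [100, 105, 95, 20, 230, 30]  # hypothetical output of a strong sharpen
mask = [0.0, 0.0, 0.0, 1.0, 1.0, 0.5]    # black on background, white/gray on subject

result = blend_with_mask(original, sharpened, mask)
print(result)
```

The background pixels keep their original values, the white-masked pixels take the full sharpening, and the gray-masked pixel lands halfway between the two layers.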

Other programs with layers would be similar. If you don’t have Photoshop, consider using Nik’s free Sharpener Pro 3. (All of the Nik Suite is free.)

In this photo, I only applied local sharpening to the three pelicans and the moon. I masked out the sky completely.

3) Step Three: Output Sharpening

The final adjustment is output sharpening, which depends upon the specific material that you are using for a print. Output sharpening is simply the additional sharpening that you apply to counteract high levels of texture in a print.

Consider a canvas, aluminum, or matte print, for example. If you don’t add any additional sharpening, your photograph will be blurry simply because of the material you use! This isn’t as much of a problem with low-texture papers – say, glossy or metallic.

Even photographs intended for the web need some output sharpening. Every time that you downscale an image, your software needs to interpolate pixels and bin them together. In the process, you may lose detail; the best way to counteract this is to add a bit of extra sharpening.
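As a rough illustration of why downscaling calls for a little extra sharpening, the Python sketch below halves a row of pixels by averaging neighbors, then applies a simple unsharp mask to the smaller version. Both helpers are assumptions chosen for clarity; export tools use more sophisticated resampling and sharpening than this.

```python
def downscale_by_two(row):
    """Halve the width by averaging each pair of neighboring pixels."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


def unsharp_mask(row, amount=1.0):
    """Sharpen by adding back the difference from a 3-pixel average."""
    n = len(row)
    out = []
    for i, value in enumerate(row):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        blurred = (left + value + right) / 3
        out.append(value + amount * (value - blurred))
    return out


def max_step(row):
    """Largest jump between neighboring pixels (a crude measure of edge contrast)."""
    return max(abs(b - a) for a, b in zip(row, row[1:]))


full_size = [20, 20, 20, 20, 20, 80, 80, 80, 80, 80, 80, 80]
web_size = downscale_by_two(full_size)
web_sharpened = unsharp_mask(web_size, amount=1.0)

print(max_step(full_size), max_step(web_size), max_step(web_sharpened))
```

In this example the crisp edge in the full-size row turns into a softer ramp after downscaling, and the sharpening pass steepens it again. The extra sharpening does not bring back the lost pixels; it only restores some of the edge contrast.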

In Practice

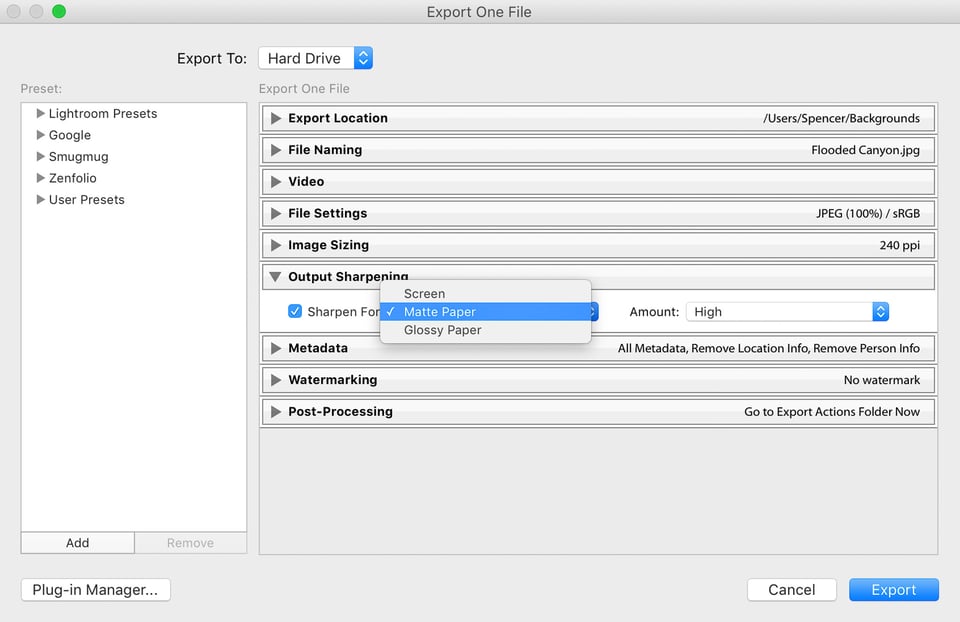

Usually, output sharpening is very easy to apply, since it is built into many programs already. Take a look at this screenshot from Lightroom, for example:

As you can see, Lightroom has built-in algorithms for output sharpening, which means you don’t need to do any of this manually! Simply export your photo from Lightroom, choosing the proper strength for whatever material you are using.

This may take some trial and error, and it does depend upon your print size as well as the material. Personally, for example, I prefer the “low” sharpening for large prints on glossy paper, but I use “standard” sharpening for web when I export to Photography Life. When in doubt, go for lower sharpening – it is easier to ruin a photo if your edits are too aggressive.

Also, if you are creating a print that is particularly important, you may not want to let Lightroom’s automatic software take over. Instead, consider adjusting the sharpness manually, either in Lightroom or Photoshop. I don’t do this for most images, but it may be necessary for certain, specialty prints (such as very small prints on canvas, which require stronger sharpening adjustments than any of Lightroom’s normal export presets allow).

Lastly, if you don’t want to use Lightroom, the free Nik Sharpener Pro 3 has an output sharpening tool that allows for more precise adjustments. I have never been unhappy with Lightroom’s export sharpening, but certainly some photographers will want more control.

4) When Does This Matter?

The three-step sharpening method is the ideal way to sharpen a photograph, no matter your subject. However, it is more important – as you may expect – if there are large areas without detail in a photograph.

Typically, the one-step sharpening method that many people use in Lightroom (or perhaps two-step if they also do output sharpening) is simply to adjust the sliders until the photo looks as good as possible. However, if parts of the photo are more detailed than others, you can’t expect great results from applying the same amount of adjustment to the entire image.

The masking tool in Lightroom and other software aims to fix this, and it does a decent job. However, it inevitably isn’t perfect. If your masking is too strong, details in the photo will look “crunchy” and uneven. If your masking is too weak, low-detail areas of your photo will grow too grainy and noisy.
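The idea behind an automatic mask can be sketched in a few lines of Python: measure how much each pixel differs from its neighbors, and only sharpen where that difference passes a threshold. This is an assumption about how such a mask might work in principle, with hypothetical helper names; it is not a description of Lightroom’s actual masking algorithm.

```python
def local_detail(row):
    """Largest absolute difference between each pixel and its direct neighbors."""
    n = len(row)
    return [
        max(abs(row[i] - row[max(i - 1, 0)]), abs(row[i] - row[min(i + 1, n - 1)]))
        for i in range(n)
    ]


def sharpen(row, amount):
    """Simple unsharp mask using a 3-pixel average as the blur."""
    n = len(row)
    out = []
    for i, value in enumerate(row):
        blurred = (row[max(i - 1, 0)] + value + row[min(i + 1, n - 1)]) / 3
        out.append(value + amount * (value - blurred))
    return out


def masked_sharpen(row, amount, threshold):
    """Keep the sharpened value only where local detail reaches the threshold."""
    detail = local_detail(row)
    sharpened = sharpen(row, amount)
    return [s if d >= threshold else o for o, s, d in zip(row, sharpened, detail)]


# Slightly noisy sky (first five pixels) next to a strong edge.
row = [100, 102, 99, 101, 100, 100, 30, 30, 30]

no_mask = masked_sharpen(row, amount=1.0, threshold=0)
with_mask = masked_sharpen(row, amount=1.0, threshold=10)
print(no_mask)
print(with_mask)
```

With no masking, the small variations in the sky are amplified along with the edge. With a threshold of 10, the sky is left untouched and only the two pixels at the edge are sharpened. A single threshold cannot suit every region of a real photo, which is why manual, local sharpening tends to give cleaner results.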

Three-step sharpening is useful for landscape photographers, although not to the same degree. If I have clouds in a photo, there still isn’t a better way to avoid adding unwanted noise and artifacts. However, if I’m photographing a scene completely filled with detail (like the image below), I may be able to get away with just two-step sharpening: aggressive deconvolution sharpening, then output sharpening. It all depends upon the image.

Sharpening techniques can be a confusing topic, since there are so many different tools at your disposal. Hopefully, this article covers three-step sharpening in a way that is easy to implement in your own photos, regardless of your specific software.

Will you need to use these techniques for your own photography? It all depends upon your specific situation. If you tend to display photos online more than in person, the techniques in this article are unlikely to make a huge difference. However, if you want the sharpest possible images for a print show or exhibition, three-step sharpening is ideal.

Spencer, I studied your article and I found many useful aspects of sharpening in it. Since I have been a Nikon photographer for many years, I started my post-processing “career” with Nikon’s software. And I confess: I’m still using Capture NX2 (not Capture NX-D)!

NX2 has a wonderful tool called “high-pass sharpening” which only sharpens the edges within a picture. So you are never in trouble with grain or noise in flat parts of a picture.

I know that Photoshop also has high-pass sharpening, but this is a lousy tool. Unfortunately Nik Software died a couple of years ago, and not even Sharpener Pro 3 offers the high-pass sharpener.

For my jobs, the high-pass sharpener of Capture NX2 is still the best sharpening you can have in the post-processing market.

Klaus

One size does not fit all. All images are different.

High pass sharpening is only one of three methods of sharpening, the others being luminance and edge sharpening.

High pass has the advantage of simplicity and little accentuation of noise. It uses a wide radius, and the contour accentuation it produces is soft, wide and feathered. Its effect is rather like the Clarity slider in LR but with more control. It is very useful for some images like ‘broad brush’ landscapes, but for high-detail images it is rarely ideal.

With respect, the Photoshop filter is not a lousy tool; it is superb and very flexible, but it requires careful use in a number of stages. It is not a simple slider.

Thank you Spencer.

A terrific article and thanks to Betty and Pete (inter alia) for some energetic and constructive discussion.

Darce

Two questions from a dilettante in photography:

1) is there an easy way to make an out-of-focus photo (or area of a photo) come into sharp focus?

2) is there an easy way to sharpen a photo which has motion blur (i.e., where the camera was in motion, not the subject)?

Unfortunately, the answer to both those questions is no. Photoshop does have a motion blur reduction feature, but it doesn’t work well. You’d have much better luck trying to get the photo right in-camera.

Adding to what Spencer said, if you are using a conventional camera, the answer to both of your questions is no.

However, if you use a camera that captures the light-field, then you can selectively choose what you want to be in focus during post processing.

The Lytro camera does exactly that. See an example here: support.lytro.com/hc/en-us

Thanks. That’s pretty much what I expected from my engineering background in image processing, but I had hopes. I do appreciate the quick responses.

Hey Donb, if the blur is relatively small and linear, then you can use Topaz infocus: www.topazlabs.com/infocus

I think topaz labs has their inFocus software that can help with motion blur. Not sure how well it works but you can download a free trial.

Art,

Pete A has answered part (b) of your question, so I will deal with part (a).

TIFF is an open format (although Adobe actually owns it) and is the de facto industry standard over a wide range of applications.

PSD is proprietary to Adobe and is no longer even truly their native format. It has no advantages and some disadvantages over TIFF.

PSD is generally not well recognised outside of Photoshop so not good if you want to send files out to a third party (e.g. print company). However, if you work exclusively in Photoshop, PSD will work fine.

Both support layers (although PSD files end up a little smaller).

In both PSD and TIFF, there’s an option known as “Maximize PSD and PSB File Compatibility”.

It is always used when saving a TIFF, but for PSD it is optional and is set in Photoshop’s preferences.

A copy of the entire layered document in a flattened state is stored within the document. If you use this option, the document size increases due to this embedded, flattened version of the image, although a TIFF and a PSD document will be roughly the same size when this option is applied. This allows other products, both those by Adobe and those by third parties, to view the image composite even though they may not have access to the individual layers, as not all TIFF readers support layered documents. However, all modern readers should have no issues reading the single, flattened copy. Even some Adobe applications like Bridge and Lightroom require a flattened copy within the document for viewing, so my recommendation is to have this always set.

As you may have guessed, I use TIFF.

Fascinating and helpful article! Photography Life is on a roll.

Related Questions:

a. You mention outputting from Photoshop in TIFF. I have long wondered what are advantages and disadvantages of each format (e.g. PSD vs TIFF). Some preserve layers and others do not? Can you explain this briefly or suggest a resource? I have been inclined to use PSD thinking that it’s Adobe’s native format.

b. I still tend to output full-size JPEGs for all purposes, figuring that downsizing for any particular application (e.g. web) will be best handled by the application itself built for that purpose. E.g., will my own attempts at downsizing really be better than Facebook’s? Have you done any experimenting and found that doing your own downsizing prior to export turns out visibly better for some applications? What parameters do you recommend for general web posting or Facebook in particular?

Thanks!

Art

Art, by far the most important editing step to get right when preparing images for sharing, especially via the Web and via e-mail, is to make sure that the images are not only in sRGB IEC 61966-2-1 format, but also that this profile is embedded in the image Exif data. Many Web browsers and image viewers either lack colour management or they don’t implement it properly.

As to whether it is best to rely on Facebook et al. to provide the image downsizing, these corporations have to carefully balance available CPU time, and its electricity cost, against image quality, which is an ever-changing trade-off with each new iteration of industrial-grade Web server and its software. These machines have processing power that totally boggles the mind of everyone who uses consumer-grade computing devices.

For general-purpose photographic JPEGs, I can’t think of a good reason for not using the image downsizing provided by the corporations. If we have prepared, say, a JPEG that consists of a highly-detailed diagram that is annotated with fine text, then it would be very easy to perform an experiment to decide whether to rely on the corporation’s server or on our own skills.

The bottom line is, I think, that any photographic image which can be easily ruined by a less-than-perfect downsizing algorithm, very likely has next to zero artistic merit.

Thank you, Spencer, for this excellent article. It always amazes me how an article reviewing gear gets lots of attention. For the most part, amateurs believe that a new camera or lens will make them a better photographer, but what they really need is advice like this. Keep them coming; I saved it in my tips folder.

I very much agree.

Similarly, the Critique, Nature, etc, forums seem relatively poorly supported – even, dare I say it, by PL staff.

No input for a very long time.

There seems to be a much greater interest in equipment than in actual image making and sharing.

Why is that, I wonder?

How about a weekly/monthly contest in each category scored by reader votes to liven things up?

Spencer

A nice introductory article on sharpening workflow which establishes the importance of three pass sharpening for optimal sharpening.

One point I would question is your conflation of deconvolution and capture sharpening as if they were the same thing – they are not. I am not an expert but my understanding is that capture sharpening is a generic term for correcting the softness incurred in the image capture process. Deconvolution sharpening is just one method of sharpening among several.

It is not clear what algorithms are used by Adobe in their sharpening engine (for obvious reasons) nor at which point conventional unsharp mask (USM) sharpening is blended into deconvolution sharpening.

Also, capture sharpening settings are very specific to each camera model and even type and quality of the lens used, which all have specific characteristics and deficiencies – they are not all the same. Other factors such as noise and frequency of detail also come into play and obviously these are highly variable.

For best results, albeit at the cost of some extra work, many experts recommend carrying out capture sharpening during RAW conversion (after dealing with noise), creative sharpening in Photoshop and output sharpening automatically using an output preset suited to the substrate or medium.

Finally, I would question your recommended settings for capture sharpening, as the values you give seem more suited to the Fuji X-Trans sensor than to most other (Bayer type) sensors with and without anti-aliasing filters. I would suggest this setting might unduly emphasise noise. But ultimately it’s a matter of judgement and taste, so what works for you is right for you.

All in all, it’s a bit of a minefield and I don’t envy you the job of writing about this subject!

Thanks for mentioning this, Betty — I didn’t really get a chance to elaborate in the article.

My understanding is that “true” deconvolution sharpening is impossible without highly specific data about the exact optical properties of your equipment. So, NASA can do it, but we can’t! However, putting the “detail” slider to 100 changes Lightroom’s algorithm to be as close as possible to deconvolution sharpening. At a value of zero, Lightroom’s algorithm is entirely unsharp mask; at 100, it is entirely “deconvolution.” Since the first step of sharpening should be deconvolution (or the closest possible), I considered it to be the same as capture sharpening. However, you are right, the two are not always the same!

The settings that I gave are very different from the ones you would use in a one- or two-step sharpening process. Deconvolution sharpening will only make faint changes to the lowest-level details of a photograph; step two is where the bulk of the sharpening takes place. The values I suggested definitely seem unusual, especially since Lightroom users have such a wide range of sharpening preferences in the first place! For the goal of pure deconvolution sharpening, though, I believe they are the closest you can get, regardless of sensor type. The “amount” slider will depend upon your sensor type, but the others should remain constant with any camera (although you could make a case for increasing masking slightly for high-ISO photos).

I definitely agree that the process you gave — capture sharpening during RAW conversion, creative in Photoshop, output automatically — is ideal. Although it is possible to do creative/local sharpening in Lightroom, that step is the one where you need to make the strongest adjustments, and Photoshop’s extra options are generally worth the effort.

Let me know if any of your experiences disagree with this, though. There isn’t much scientific information on sharpening available, and everyone seems to think that their personal method is the One True Sharpening Technique. This article reflects the best info I could find, but I’m definitely willing to update it if any verifiable information exists to the contrary.

Spencer, You are correct that “true” deconvolution requires “highly specific data about the exact optical properties of your equipment” to be used by the deconvolution kernel. To maximize depth of field and resolution, “true” deconvolution would be ideal; but for images that require a shallow depth of field, deconvolution would be counterproductive.

The first sharpening step needs to occur before all other image processing steps. Why? Because it affects the shape of the MTF curve. Too much sharpening creates a peak in the MTF curve, which translates into overshoot and ringing; too little sharpening results in a sagging MTF curve, which translates into blur and low resolution. Optimizing the trade-off between the flatness of the frequency response and the overshoot in the sampled domain is the first step to get right in *all* signal processing systems. The algorithms used in the subsequent processing steps are designed based on the assumption that this first step has been optimized — this is especially important to high-performance noise reduction algorithms. This is why Lightroom and some other editors, and Nikon’s RAW converters do not allow the user to change the processing order of the first set of image processing steps.
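The peak-versus-sag trade-off described above can be illustrated with a toy frequency-response calculation. The Python sketch below multiplies the response of a small blur kernel (standing in for capture softness) by the response of a basic unsharp mask at several sharpening amounts. The kernels, the amounts, and the function names are all assumptions picked for illustration; real lens and sensor responses are far more complex.

```python
import math


def blur_response(f):
    """Frequency response of the 3-tap blur kernel [0.25, 0.5, 0.25].

    f is the spatial frequency in cycles per pixel (0 to 0.5).
    """
    return 0.5 + 0.5 * math.cos(2 * math.pi * f)


def sharpen_response(f, amount):
    """Frequency response of an unsharp mask built on a 3-tap box blur."""
    box = (1 + 2 * math.cos(2 * math.pi * f)) / 3
    return 1 + amount * (1 - box)


def system_response(f, amount):
    """Combined response of the blur followed by the sharpening."""
    return blur_response(f) * sharpen_response(f, amount)


for amount in (0.5, 1.5, 3.0):
    curve = [round(system_response(f, amount), 3) for f in (0.0, 0.125, 0.25, 0.375)]
    print(amount, curve)
```

At a quarter of a cycle per pixel, the combined response is about 0.67 with an amount of 0.5 (a sagging curve), back at 1.0 with an amount of 1.5, and 1.5 with an amount of 3.0 (a peak above the original contrast, which shows up as overshoot and halos).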

Fuji X-Trans seems particularly suited to deconvolution sharpening (I don’t understand the technicalities, but it has something to do with the more randomised type of pixel array), and I use this in Iridient Developer for Fuji image files.

I will give your recipe (low Amount but high Detail) a try with Nikon NEF files (D800E like yours) in both Iridient and Adobe Camera Raw and see what happens.

Incidentally, with output sharpening for print, the sharpening is required not just to take account of paper texture, but more importantly, to counteract ‘dot gain’ – the spreading of each wet ink dot as it hits the paper and ‘soaks in’. Matte papers are more porous than gloss and so need more output sharpening to achieve the desired appearance.

Output sharpening is also required for the purpose of approximating the cardinal sine function [aka: sinc function; sin(x)/x] compensation, which is always required in the conversion from the sampled domain back to the analog domain of the real world (at our macroscopic level of perception).

The ‘dot gain’ that Betty mentioned increases the size of the output sampling aperture therefore the aperture size of the sinc function must be widened appropriately.

Sinc function compensation can be applied accurately only in the analog domain as the final step in the digital-to-analog reconstruction process, but this cannot be achieved in imaging because the required optical spatial filters don’t exist (they are wholly impractical to fabricate).

Sinc function compensation applied in the digital domain that takes the printing medium properly into account is theoretically possible in Lab colour space, but it would require a printer capable of printing negative values of lightness and higher levels of lightness than the printing paper has when no ink is applied to it. Negative lightness inks are physically impossible, which is why over-sharpening images in the final output sharpening editing step results in wasted ink and a lower resolution of shadow details; and, of course, the obnoxious ‘halos’ (overshoot and ringing) that surround all of the sharp transitions in brightness and/or colours between the objects in the scene.

Spencer, thanks for the useful tips. One doubt I have about the three-step procedure you describe: if you always do deconvolution as step 1, where you basically fix all the available sliders in LR (amount, radius, detail), and then move to step 2, which is local sharpening where only the amount is available, you end up never using any radius other than 0.5 or any detail other than 100, and never masking. Did I get this wrong? Thanks.

Your example of the order making no difference is wrong in many ways.

First of all, you used the worst example – a vignette darkens the corners of the photo, to put it simply.

If vignetting takes place first, and then the result is cropped, some or even all of the darkened corners can be cropped off, depending on the part you keep.

If you crop first, and then add vignetting, the corners of the remaining part – which is now the full photo – are darkened.

That’s a completely different effect.

Next thing – the order of applying certain effects can have a dramatic impact on the processor workload.

Crop an image to about 70 to 50% of the original size FIRST, and THEN apply a CPU intensive de-noising, filter or similar – and try the same the other way, apply that effect first and crop after. The VISIBLE result may be the same – but when a CPU intensive effect (or several) has to be applied to a part of the image that is later cut off ANYWAY, it would be totally dumb to crop only at the end of the post processing.

Peter, actually in Lightroom the order doesn’t matter. If you have Lightroom, I encourage you to test the vignette-crop example yourself. As I said in the article, this only applies to a few programs – in software like Photoshop, you are right that the order makes a huge difference.

Peter

You are right in principle but wrong in Lightroom.

The Lightroom engine stores all settings as you make them and renders them in the correct order on the fly in the background – so they are applied in the correct manner and order whether the user likes it or not!
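The difference between a parametric editor and a destructive one can be sketched in Python. In the toy model below, a parametric renderer stores settings and always applies them in one fixed order, while a destructive editor applies each step immediately. The operations, the fixed order, and all names are assumptions made for illustration; Lightroom’s real pipeline is not public.

```python
def crop(row, bounds):
    """Keep only the pixels between the start and stop indices."""
    start, stop = bounds
    return row[start:stop]


def vignette(row, amount):
    """Darken only the first and last pixel of the row."""
    out = list(row)
    out[0] *= 1 - amount
    out[-1] *= 1 - amount
    return out


OPERATIONS = {"crop": crop, "vignette": vignette}
RENDER_ORDER = ["crop", "vignette"]  # assumed fixed order, chosen for illustration


def render_parametric(row, settings):
    """Apply stored settings in the fixed render order, ignoring edit order."""
    for name in RENDER_ORDER:
        if name in settings:
            row = OPERATIONS[name](row, settings[name])
    return row


def apply_destructive(row, steps):
    """Apply each step immediately, in the order the user made it."""
    for name, value in steps:
        row = OPERATIONS[name](row, value)
    return row


original = [100, 100, 100, 100, 100, 100]

# The same two settings, entered in opposite orders.
settings_a = {}
settings_a["crop"] = (1, 5)
settings_a["vignette"] = 0.5

settings_b = {}
settings_b["vignette"] = 0.5
settings_b["crop"] = (1, 5)

print(render_parametric(original, settings_a))
print(render_parametric(original, settings_b))
print(apply_destructive(original, [("crop", (1, 5)), ("vignette", 0.5)]))
print(apply_destructive(original, [("vignette", 0.5), ("crop", (1, 5))]))
```

Both parametric renders come out identical because the order in which the settings were entered is never consulted. The two destructive results differ: cropping after the vignette cuts the darkened ends away.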

Do you happen to know whether Lightroom applies NET changes, or does it do each change in the history panel separately?

For example, if I spend a few minutes adjusting global sharpness slider up and down, that might be a dozen steps in the history panel, but actually only one net change is necessary. I have been so concerned about this that I use the undo command (control-Z) after each change before making the subsequent adjustment. It would be great to know whether Lightroom does this for me by calculating a net change prior to application.

The issue is processing time. D mode on my 13″ MBPr is fast at first but bogs down substantially with heavily edited photographs. I wonder whether my new habit of using ctl-Z a lot is actually helping reduce processing time.

Thanks,

Art

As I understand it, the changes are net to whatever you have on the screen at the time. The changes are stored in the History panel (or rather the Lightroom Catalogue.lrdata folder) so you can easily revert to a previous adjustment – much the same as in Photoshop.

If you look at the History panel you will see two columns. The first column reflects the degree/amount of change since that slider was last used. The second column reflects the actual net change being applied to the image for that slider. So no, there is no need to do multiple undo’s.
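If the history works the way it is described above, the net result can be modeled as “the last value wins” for each slider. Here is a small Python sketch of that idea; the history format and the `net_settings` helper are hypothetical, and this is not how Lightroom’s catalog is actually stored.

```python
def net_settings(history):
    """Collapse a list of (slider, value) history steps into the latest value per slider."""
    settings = {}
    for slider, value in history:
        settings[slider] = value  # a later step simply replaces an earlier one
    return settings


history = [
    ("sharpening", 20),
    ("sharpening", 45),
    ("exposure", 0.3),
    ("sharpening", 30),
    ("sharpening", 35),
]

print(net_settings(history))
```

Four sharpening steps in the history collapse to a single value of 35, so only one sharpening adjustment would need to be rendered, however many times the slider was moved.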

Personally, once I get to an acceptable result or version, I make a Snapshot (temporary) or a Virtual Copy (more permanent), delete the history steps to that point and then continue on from that baseline to make further versions/interpretations..and so on.

Lightroom does unfortunately get bogged down with heavy processing especially when using the adjustment tools a lot. I have 32GB of memory on my MacPro and that slows down too – which is a good reason to hit Edit In and transfer to Photoshop.

This has always been an issue and probably always will be. Lightroom is a parametric editor. Global adjustments like Exposure, White Balance, etc. will happen fast because they are calculated on the entire image at once. Localised adjustments like the brush and spot healing require much more effort to map to your photo. With larger RAW file sizes, this only gets worse and worse. The issue is with LR. It is simply not built to completely max out all of your resources.

One often overlooked setting that you can uncheck is under Catalog Settings. Disable the “automatically write changes to XMP”. This constant stream of writes for every minute adjustment you make can bog you down if you don’t have a very fast machine. You can also increase your Cache size. Or try turning GPU acceleration off. Or turn off ‘Enable Profile Corrections’ in the Lens Profile panel in the Develop module while you do your edits. Sometimes these steps help a bit – but mostly not!

I don’t think doing CTL-Z will make a blind bit of difference; in fact, it may make things worse, as you are giving Lightroom yet more tasks to perform.

What about local sharpening using the adjustment brush in Lightroom? You can select the area you want to sharpen and then make the local sharpening adjustments just in that area.

Hi Allen, I mentioned that in the second paragraph under “in practice.” Still, thanks for adding this — it is a very useful adjustment when you want to stay in Lightroom.

Oops. I now see you did mention it as having only one slider.

No worries!

Allen

Adjusting local sharpness in LR can be done with a brush and auto masking but it’s a bit limited in scope.

Basically, it’s ‘more of the same’.

PS (and other software) offer a far greater range of options.

It’s a very helpful tip.

Thank you, glad you found it useful!